How to Set up Mistral AI - 7B on AWS Easily via AMI: OpenAI and API Compatible

Introduction

Mistral AI has quickly become a prominent name among developers of large language models (LLMs), known for models that combine strong performance with modest resource requirements. Its 7-billion-parameter model, Mistral 7B, gives developers and businesses a capable open model that they can run on their own infrastructure.

This guide delves deep into the world of Mistral AI, exploring its essential features and the significant impact it brings to the AI industry. We focus particularly on the practical aspects of integrating Mistral AI within your technological infrastructure, highlighting the streamlined process of deploying this advanced model on Amazon Web Services (AWS) using Amazon Machine Images (AMI). Tailored for both experienced developers and AI enthusiasts beginning their journey, this article provides a thorough walkthrough of setting up Mistral AI on AWS. Our aim is to offer a detailed, user-friendly guide that ensures a seamless and effective integration experience.

Embark on this journey to unlock the full potential of Mistral AI, and discover how its deployment on AWS can significantly elevate your AI projects.

A to Z Full Installation Guide by Developers

What is Mistral AI?

Mistral AI develops large language models (LLMs). This guide focuses on its 7-billion-parameter model, Mistral 7B, which is valued for delivering strong results from a comparatively small, efficient model.

At its core, Mistral AI is a highly advanced generative text model, boasting an impressive array of features that set it apart from its contemporaries. It's renowned for its computational efficiency, a crucial factor that makes it both powerful and accessible. This efficiency doesn't come at the expense of performance; on the contrary, Mistral AI delivers remarkable accuracy and depth in language understanding and generation, making it an ideal choice for a wide range of AI-driven applications.

One of the key aspects of this deployment is its OpenAI compatibility. The API exposed by the AMI follows the OpenAI API format, with endpoints such as /v1/completions and /v1/embeddings, so applications and client libraries written against OpenAI's API can be pointed at a self-hosted Mistral instance with few changes.

Moreover, the AMI is designed with API integration in mind and is available in builds for both ARM64 and x86 architectures. This focus on API integration means that Mistral AI can easily interact with a variety of applications and services, enhancing its versatility and applicability in different scenarios. Whether it's for natural language processing, content creation, or more complex AI tasks, Mistral AI's API integration feature ensures that it can be a valuable asset in any technological ecosystem.

Mistral AI is more than just an AI model; it's a comprehensive solution that combines efficiency, performance, and versatility. Its compatibility with OpenAI and robust API integration capabilities make it an essential tool for anyone looking to harness the power of AI in their projects or research.

Benefits of Using Mistral AI on AWS with Meetrix's AMI

Deploying Mistral AI on Amazon Web Services (AWS) via Meetrix's Amazon Machine Image (AMI) offers a multitude of advantages, streamlining AI integrations and enhancing efficiency for businesses and developers alike. Here's an overview of the key benefits:

1. Simplified Deployment Process

- The use of Meetrix's AMI for Mistral AI eliminates complex setup processes. With the AMI, users can deploy Mistral AI on AWS in just a few clicks, significantly reducing the time and technical know-how required for setup.

- This simplicity is especially beneficial for teams with limited resources or those new to AI, allowing them to bypass the steep learning curve typically associated with AI model deployment.

2. Optimized for AWS Environment

- Mistral AI, when deployed through Meetrix's AMI, is fully optimized for the AWS infrastructure. This ensures that the model runs efficiently, taking full advantage of AWS's robust and scalable cloud environment.

- This optimization means better performance, quicker response times, and more reliable AI operations, crucial for demanding AI applications.

3. Seamless Integration and Compatibility

- The integration of Mistral AI with AWS through Meetrix's AMI is seamless, ensuring compatibility with various AWS services and tools. This integration opens the door to a vast array of functionalities and services offered by AWS, from data storage with Amazon S3 to serverless compute with AWS Lambda.

- Such integration not only broadens the scope of what can be achieved with Mistral AI but also simplifies the process of incorporating AI into existing AWS-based systems.

4. Cost-Effective and Scalable

- Deploying Mistral AI via Meetrix's AMI on AWS is a cost-effective solution. Billing is hourly and usage-based: users pay only for the hours they run, covering the AWS resources and the AMI's hourly software charge. This pay-as-you-go model makes it an attractive option for businesses of all sizes.

- AWS’s scalability means that as your AI demands grow, you can easily scale your Mistral AI deployment without any significant changes to your existing setup.

5. Enhanced Security and Compliance

- AWS is known for its stringent security measures. By deploying Mistral AI on AWS, users benefit from the platform's robust security protocols, ensuring that their AI applications and data are well-protected.

- Compliance with various data protection and privacy laws is also streamlined, as AWS's global infrastructure is designed to meet the highest standards of compliance.

6. Access to AWS Support and Resources

- Users of Mistral AI on AWS gain access to a wealth of resources and support from AWS. This includes detailed documentation, community forums, and professional support from AWS experts, which can be invaluable for troubleshooting and maximizing the potential of Mistral AI.

7. GDPR Security with Self-Hosting for Mistral AI - 7B

- Enhanced Data Control: By self-hosting Mistral AI - 7B with Meetrix's AMI on AWS, users gain unparalleled control over their data, ensuring compliance with GDPR's privacy standards by managing data processing and storage in-house.

- Customized Data Protection: Self-hosting Mistral AI - 7B allows for the implementation of bespoke data protection measures, such as secure data encryption and access controls, to safeguard sensitive AI-generated content and user inputs.

8. Commercial Support for Mistral AI - 7B

- Dedicated Setup and Optimization Support: Meetrix offers comprehensive commercial support for Mistral AI - 7B, guiding users through the installation process, optimizing performance, and ensuring the AI model runs efficiently on AWS.

- API Integration Assistance: To extend Mistral AI - 7B's capabilities, Meetrix provides support for API integration, allowing users to seamlessly connect the AI model with their existing applications and systems for enhanced functionality.

- Customization for Specific Needs: With Meetrix's commercial support, businesses can customize Mistral AI - 7B to meet their specific requirements, whether for content generation, data analysis, or other AI-driven tasks, maximizing the model's utility and effectiveness.

- Reliable GDPR Guidance: Meetrix assists organizations in aligning their Mistral AI - 7B deployment with GDPR compliance, offering expert advice on data handling practices and privacy measures to protect user information and maintain regulatory compliance.

Leveraging Mistral AI on AWS with Meetrix's AMI presents a powerful combination of ease of use, efficiency, and scalability. It's an ideal solution for businesses and developers seeking to harness the power of AI without the typical complexities associated with deploying and managing AI models.

Mistral AI vs GPT-3 vs LLaMA vs BARD vs BERT AI Model Comparison

Mistral AI vs GPT-3 (OpenAI)

Mistral AI, with its 7 billion parameters, offers a compact yet powerful solution for AI applications, particularly excelling in natural coding abilities. In contrast, OpenAI's GPT-3, known for its massive 175 billion parameters, is a behemoth in natural language understanding and generation. While GPT-3 shines in creating human-like text, its large model size can be resource-intensive. Mistral AI, meanwhile, provides a more accessible and customizable platform, appealing especially to those seeking open-source solutions and easy integration.

- Model Size: Mistral AI is smaller but efficient; GPT-3 is much larger, offering expansive language capabilities.

- Performance: Both excel in their domains; Mistral AI in coding tasks, GPT-3 in general language tasks.

- Accessibility: Mistral AI is open-source and user-friendly; GPT-3 requires API access and is less open for modifications.

Mistral AI vs LLaMA

Mistral AI and LLaMA both stand as prominent figures in the AI landscape. Mistral AI's focus on adaptability across diverse use cases makes it a versatile choice. LLaMA, with its focus on extensive language modeling, excels in large-scale language applications. While LLaMA brings depth and breadth in language understanding, Mistral AI offers a balance, making it particularly efficient in environments where model size and resource utilization are considerations.

- Capabilities: Mistral AI is adaptable; LLaMA is more specialized in large-scale language tasks.

- Open-Source Aspect: Both models promote open-source use but with different approaches to training and applications.

Mistral AI vs BERT

Mistral AI and BERT are both built on transformer architecture, yet they serve different niches in the AI world. Mistral AI's adaptability allows it to perform a variety of tasks, from generative to analytical. BERT, being one of the first models to utilize bidirectional context, is more focused on understanding human language, particularly in tasks like sentiment analysis and question answering.

- Model Architecture: Both use transformers, but with different focuses and implementations.

- Use Cases: Mistral AI is versatile; BERT excels in specific NLP tasks like sentiment analysis.

Mistral AI vs Bard AI

Mistral AI, known for its 7 billion parameter model, is celebrated for its efficiency in a variety of AI applications, especially in coding. Bard AI, on the other hand, is a recent entrant in the AI landscape, developed by Google. While detailed specifics of Bard AI are not as publicly documented as other models, it's known for its conversational capabilities and integration with Google's vast data resources. Bard AI focuses on providing up-to-date, accurate information in a conversational format. Mistral AI's strength lies in its open-source nature and versatility, especially in coding tasks and custom applications.

- Model Size: Mistral AI is efficient with 7 billion parameters; Bard AI's parameter count is not publicly known but is designed for conversational AI.

- Performance: Mistral AI excels in coding and versatility; Bard AI is focused on delivering conversational responses with up-to-date information.

- Integration: Mistral AI is adaptable to various environments; Bard AI is likely to be integrated with Google's ecosystem.

AI Model Comparison Table (Mistral AI vs GPT-3 vs LLaMA vs BARD vs BERT AI)

Prerequisites for Mistral AI Setup on AWS

Before embarking on the journey of setting up Mistral AI on Amazon Web Services (AWS) using Meetrix's AMI, it is crucial to ensure that certain prerequisites are met. These prerequisites lay the groundwork for a successful and seamless deployment. Here's a breakdown:

1. Basic Understanding of AWS Services:

- Familiarity with EC2 (Elastic Compute Cloud): Knowledge of how to launch and manage EC2 instances, as Mistral AI will be deployed on these virtual servers.

- Understanding of AWS IAM (Identity and Access Management): Awareness of how to manage access to AWS services and resources securely.

- Insight into AWS VPC (Virtual Private Cloud): Basic knowledge of networking on AWS, including setting up VPCs, subnets, and security groups.

2. Active AWS Account

- Account Setup: Ensure you have an active AWS account. If not, sign up on the AWS website. Keep your login credentials secure and handy.

- Billing Setup: Confirm that your billing details are accurate and up-to-date to avoid any service interruptions.

3. AWS Permissions and Security Protocols

- IAM Roles and Policies: Ensure you have the necessary permissions to create and manage EC2 instances, VPCs, and other AWS resources.

- Security Best Practices: Familiarize yourself with AWS security best practices, such as securing your root account and using IAM roles.

4. Basic Knowledge of AMIs (Amazon Machine Images)

- Understanding AMIs: Knowledge of what an AMI is and how it is used to create virtual servers on EC2.

- Locating and Launching AMIs: Familiarity with finding specific AMIs on AWS Marketplace and launching instances using these images.

5. Networking Basics

- IP Addressing and DNS: Basic knowledge of IP addressing and how to configure DNS settings within AWS.

- Route 53 Familiarity: If using AWS Route 53 for domain name services, an understanding of how to configure and manage it.

6. Software and Development Skills (Optional but Beneficial)

- Programming Knowledge: Basic programming skills, particularly if planning to integrate Mistral AI with custom applications or perform advanced configurations.

- API Integration: Understanding of how to work with APIs, which will be useful if you plan to leverage Mistral AI’s API capabilities.

How to install Mistral AI on AWS via Meetrix’s AMI

1. Finding and Selecting Mistral AI AMI in AWS Marketplace:

- Log into AWS Management Console: Access the AWS Management Console and sign in with your credentials.

- Navigate to AWS Marketplace: Search for 'Mistral AI' in the AWS Marketplace. Two listings are available:

- Mistral 7B Multi Model LLM Bundle for ARM64

- Mistral 7B Multi Model LLM Bundle for x86

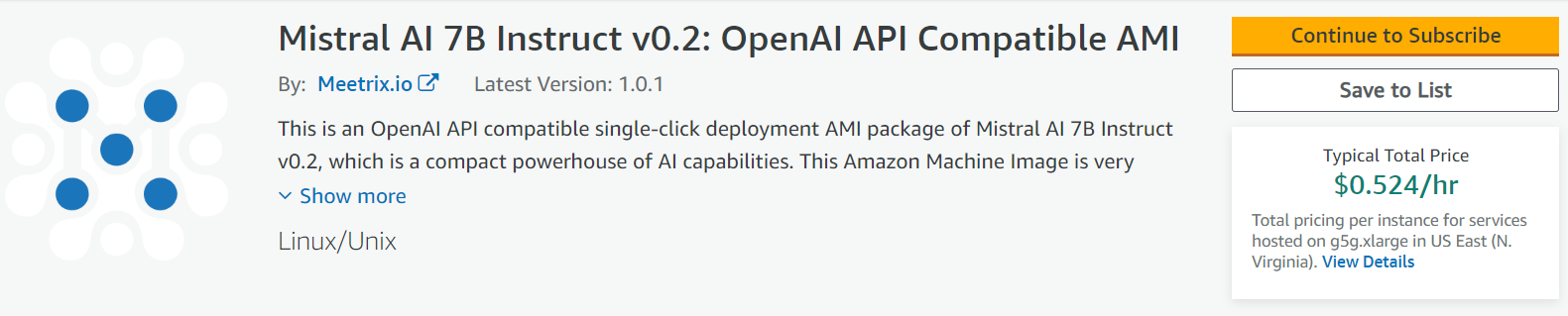

- Select the Mistral AI Product: Choose the appropriate Mistral AI AMI from the listed options.

2. Initial Setup and Configuration:

- Subscribe to Mistral AI AMI: Click on 'Continue to Subscribe' to initiate the process.

- Accept Terms and Conditions: Review and accept the terms to proceed with the subscription.

- Configuration Settings:

- After subscription, select 'Continue to Configuration'.

- Choose your preferred AWS region for deployment.

3. Launching CloudFormation for Mistral AI:

- Select Launch Option: In the 'Launch this software' page, choose 'Launch CloudFormation' from the dropdown menu.

- Click 'Launch': Begin the CloudFormation stack creation process.

4. Creating and Configuring a CloudFormation Stack:

- Prepare the Template:

- Ensure 'Template is ready' is selected.

- Click 'Next'.

- Specify Stack Options:

- Enter a unique 'Stack name'.

- Provide an 'Admin Email' for SSL certificate generation.

- Choose a deployment name and key name.

- Specify 'MistralDomainName' for SSL setup (ensure domain is hosted on AWS Route 53).

- Select the recommended instance type (e.g., g5g.xlarge).

- Set networking configurations like 'SSHLocation', 'SubnetCidrBlock', and 'VpcCidrBlock'.

- Configure Stack Options:

- Choose to 'Roll back all stack resources' in case of failure.

- Review and Submit:

- Verify entered details.

- Acknowledge IAM resource creation.

- Click 'Submit' to create the stack.
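For teams that prefer to script this step, the stack parameters above can be collected in one place. The following is a minimal sketch, not the template's documented interface: 'MistralDomainName', 'SSHLocation', 'SubnetCidrBlock', and 'VpcCidrBlock' come from the steps above, while the keys AdminEmail, DeploymentName, KeyName, and InstanceType, as well as the default CIDR values, are assumptions that should be checked against the actual template.

```python
import json


def build_stack_parameters(admin_email, deployment_name, key_name, domain_name,
                           ssh_location, instance_type="g5g.xlarge",
                           subnet_cidr="10.0.0.0/24", vpc_cidr="10.0.0.0/16"):
    """Return stack parameters in CloudFormation's list-of-dicts shape."""
    values = {
        "AdminEmail": admin_email,          # hypothetical key name
        "DeploymentName": deployment_name,  # hypothetical key name
        "KeyName": key_name,                # hypothetical key name
        "InstanceType": instance_type,      # hypothetical key name
        "MistralDomainName": domain_name,   # named in the steps above
        "SSHLocation": ssh_location,        # named in the steps above
        "SubnetCidrBlock": subnet_cidr,     # key from the steps; value assumed
        "VpcCidrBlock": vpc_cidr,           # key from the steps; value assumed
    }
    return [{"ParameterKey": key, "ParameterValue": value}
            for key, value in values.items()]


if __name__ == "__main__":
    params = build_stack_parameters(
        admin_email="admin@example.com",
        deployment_name="mistral-demo",
        key_name="my-ec2-key",
        domain_name="mistral.example.com",
        ssh_location="203.0.113.10/32",  # restrict SSH to a single address
    )
    print(json.dumps(params, indent=2))
```

The printed JSON has the shape that the AWS CLI accepts for `aws cloudformation create-stack --parameters file://params.json`, so the same values can be reused across deployments.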

5. DNS Configuration and SSL Setup:

- Copy Public IP Address:

- After stack creation, go to the 'Outputs' tab and copy the 'PublicIp'.

- Update DNS Records:

- In AWS Route 53, navigate to 'Hosted Zones' and select your domain.

- Edit the DNS record, pasting the 'PublicIp'.

- Manual SSL Setup (If Needed):

- Follow additional steps to manually generate SSL if the automatic setup fails.
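Before requesting a certificate, it can help to confirm that the DNS record already resolves to the stack's 'PublicIp'. The sketch below uses only Python's standard library; the domain and IP address shown in the usage note are placeholders, and the check reflects whatever your local resolver returns, which may lag behind Route 53 while the change propagates.

```python
import socket


def dns_points_to(domain, expected_ip, resolver=socket.gethostbyname_ex):
    """Return True if one of the domain's IPv4 addresses equals expected_ip."""
    try:
        # gethostbyname_ex returns (hostname, alias_list, ip_address_list)
        _hostname, _aliases, addresses = resolver(domain)
    except OSError:
        # Covers socket.gaierror / socket.herror when the name does not resolve
        return False
    return expected_ip in addresses
```

For example, `dns_points_to("mistral.example.com", "203.0.113.25")` returns True only once the record resolves to that address. The `resolver` argument exists so the function can be exercised without network access.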

6. Accessing Mistral AI:

- Access the Mistral AI application using the 'DashboardUrl' provided in the 'Outputs' tab.

7. Shutting Down and Removal:

- Stop the Mistral Instance:

- Via the EC2 'Instance state' options, stop the Mistral instance.

- Remove the Stack:

- Delete the CloudFormation stack if needed from the AWS Management Console.

8. Technical Support and API Documentation:

- For Support: Contact Meetrix Support at support@meetrix.io for any assistance.

- API Documentation: Refer to the provided API documentation for advanced functionalities and integrations.

Manual SSL Generation (If Needed)

1. Pre-Setup Checks:

- Ensure Domain Hosting on Route53:

- Verify that your domain is hosted on AWS Route 53.

- Automatic Setup Attempt:

- If automatic SSL setup fails, proceed with manual setup.

2. Manual SSL Setup:

- Connect to Your Server:

- SSH into your server with the following command, where <your-key-name> is the path to your private key file: ssh -i <your-key-name> ubuntu@<Public-IP>

- Execute SSL Script:

- Run the following command: sudo /root/certificate_generate_standalone.sh

- Enter Admin Email:

- Provide the admin email when prompted for SSL certificate generation.
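Once the script finishes, you may want to confirm that the server presents a valid certificate and see how long it remains valid. The following is a minimal sketch using Python's standard `ssl` module; it assumes the certificate is served on port 443 and is trusted by your system's CA store, neither of which the steps above state explicitly.

```python
import socket
import ssl
import time


def fetch_not_after(host, port=443, timeout=5):
    """Connect over TLS and return the certificate's 'notAfter' string."""
    context = ssl.create_default_context()  # verifies the chain and hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Convert a 'notAfter' string into days remaining from now."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires_at - current) / 86400
```

Calling `days_until_expiry(fetch_not_after("mistral.example.com"))` with your own domain reports the remaining validity; a verification error raised by `fetch_not_after` suggests the certificate was not installed as expected.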

Utilizing Mistral AI's API for Advanced Integration

1. Overview of API Integration:

- API Capabilities:

- Mistral AI offers a robust API, enabling seamless integration with various applications and custom development.

2. Integration Examples:

- Custom Application Development:

- Developers can leverage the API to build custom applications or enhance existing ones with Mistral AI's capabilities.

- Example Use-Cases:

- Integrating Mistral AI into a chatbot application for enhanced conversational abilities.

- Utilizing Mistral AI for content generation in content management systems.

- API Endpoints:

- Utilize endpoints like /v1/completions or /v1/embeddings for specific functionalities.

3. Getting Started with API:

- Access API Documentation: Refer to the detailed API documentation provided in the developer guide.

- Authentication and Requests: Use BasicAuth for authentication and follow the request format as per the documentation.

- Testing and Deployment: Test the API integrations in a development environment before deploying to production.
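As an illustration of the points above, a request to the /v1/completions endpoint with BasicAuth might be assembled as follows. This is a sketch under assumptions: the body fields (`model`, `prompt`, `max_tokens`) follow the OpenAI completions format, the model name "mistral-7b" is hypothetical, and the base URL and credentials are placeholders. Consult the API documentation for the exact request format.

```python
import base64
import json
import urllib.request


def build_completion_request(base_url, username, password, prompt,
                             model="mistral-7b", max_tokens=64):
    """Build (but do not send) a POST request for /v1/completions."""
    # BasicAuth: base64-encode "username:password" for the Authorization header
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    body = json.dumps({
        "model": model,            # hypothetical model name
        "prompt": prompt,
        "max_tokens": max_tokens,  # assumed OpenAI-style field
    }).encode()
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/completions",
        data=body,
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Separating request construction from sending makes it easy to inspect the URL, headers, and body in a development environment before any traffic reaches the deployed instance.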

Troubleshooting and Support for Mistral AI Setup

1. Common Challenges in Setting Up Mistral AI:

- Issue: Dashboard Not Accessible:

- Solution: Check if the 'PublicIp' is correctly updated in DNS settings and verify the network accessibility.

- Issue: SSL Setup Failures:

- Solution: Ensure the domain is correctly hosted on Route53 and manually run the SSL generation script if needed.

- Issue: API Connection Errors:

- Solution: Validate API endpoint URLs and credentials. Ensure network rules allow API communications.
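When working through the network-related checks above, a quick TCP probe can show whether a port is reachable at all before you look at DNS or credentials. The sketch below uses only the standard library; probing port 443 is an assumption based on the SSL setup described earlier, and the host is a placeholder.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unresolvable: treat all as "not reachable"
        return False
```

If `port_is_open("mistral.example.com", 443)` returns False while the instance is running, the security group or network rules are a likely place to look next.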

2. Accessing Technical Support:

- Meetrix Support:

- Contact Meetrix Support at support@meetrix.io for personalized assistance.

- AWS Support:

- Utilize AWS Support for issues related to AWS services like EC2, Route53, or CloudFormation.

- Community Forums:

- Engage with community forums for shared experiences and solutions.

3. Additional Resources:

- Developer Guides:

- Refer to the 'Mistral Developer Guide' for in-depth setup and configuration instructions.

- API Documentation:

- Utilize the comprehensive API documentation for advanced integrations and custom application development.

- Online Tutorials and Blogs:

- Explore online resources, tutorials, and blogs for additional insights and best practices.

Cost-Efficiency Analysis of Deploying Mistral AI on AWS

Deploying Mistral AI on AWS, particularly with Meetrix's AMI, offers a blend of advanced AI capabilities and cost efficiency. This analysis explores the cost implications of such a deployment, compares different AWS instance types for running Mistral AI, and provides strategies for optimizing operations to be budget-friendly.

Key Cost Considerations:

- Software Costs:

- Mistral AI's software cost is pegged at $0.104/hr, applicable across various EC2 instance types.

- Infrastructure Costs:

- Costs vary based on the EC2 instance type chosen, influenced by factors like processing power, memory, and other specifications.

- Instance Type Variations:

- g5g.xlarge (Recommended): $0.524/hr
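To put these hourly figures in monthly terms, a small calculation helps. The sketch below uses the two rates quoted above and an average of 730 hours per month. The list does not say whether the $0.524/hr figure already includes the $0.104/hr software charge, so the rates are converted separately rather than summed; the part-time schedule of 8 hours on 22 days is an illustrative assumption.

```python
HOURS_PER_MONTH = 730  # average month: 8760 hours per year / 12

SOFTWARE_RATE = 0.104     # USD per hour, software cost quoted above
G5G_XLARGE_RATE = 0.524   # USD per hour, g5g.xlarge figure quoted above


def monthly_cost(hourly_rate, hours=HOURS_PER_MONTH):
    """Return the cost in USD of running at hourly_rate for the given hours."""
    return round(hourly_rate * hours, 2)


if __name__ == "__main__":
    print("Software, always on:   ", monthly_cost(SOFTWARE_RATE))
    print("g5g.xlarge, always on: ", monthly_cost(G5G_XLARGE_RATE))
    # Illustrative part-time schedule: 8 hours a day on 22 working days
    print("g5g.xlarge, part time: ", monthly_cost(G5G_XLARGE_RATE, hours=8 * 22))
```

Comparing the always-on and part-time figures gives a rough sense of how much stopping the instance outside working hours could save.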

Comparative Cost Analysis:

Optimization Strategies:

- Right-Sizing Instances: Select an instance type that aligns with your processing needs to avoid overpaying for unutilized resources.

- Spot Instances: Consider using AWS Spot Instances for non-critical, flexible tasks to significantly cut costs.

- Reserved Instances: For consistent, long-term use, Reserved Instances offer substantial savings over standard pricing.

- Regular Monitoring: Utilize AWS monitoring tools to track usage and optimize resource allocation.

Balancing Performance and Cost:

- Higher-spec instances offer better performance but at a higher cost. Assess your specific AI needs to find the right balance.

- Consider starting with a lower-spec instance and scaling up as needed.

Leveraging CloudFormation Templates:

- Mistral AI's CloudFormation templates can streamline deployment and help manage costs more effectively.

The cost of deploying Mistral AI on AWS can be managed effectively through the careful selection of EC2 instances and the application of cost-optimization strategies. By understanding the specific needs of your AI application and regularly monitoring your AWS usage, you can maintain a balance between performance requirements and budget constraints.