How to Install Stable Diffusion on an AWS Cloud GPU: AI in Cloud Computing

Introduction

In the rapidly advancing realm of artificial intelligence, Stable Diffusion has emerged as a breakthrough in text-to-image synthesis. Text-to-image generation, once considered an intricate domain, has been reshaped by Stable Diffusion's capabilities. The model turns descriptive text into striking images, opening the way for a wide range of applications, from digital art creation to assisting in academic research.

However, Stable Diffusion becomes far more practical when run on a cloud GPU. The reason is twofold: first, the computational power that cloud GPUs offer speeds up processing, making image synthesis faster and more efficient. Second, cloud infrastructure removes the need for individuals and businesses to invest in expensive hardware. It democratizes access to state-of-the-art AI, allowing enthusiasts and professionals alike to explore text-to-image generation without substantial upfront costs.

Prerequisites

Before diving into the nuances of deploying Stable Diffusion on cloud GPUs, it's essential to be equipped with a foundational understanding of the subject:

Basic Knowledge Requirements

- A rudimentary grasp of AI and machine learning concepts.

- Familiarity with cloud computing and its benefits.

- Understanding of GPU architectures and their role in AI computations.

Tools and Resources

- Stable Diffusion's official documentation and source code.

- Cloud service provider SDKs or APIs, depending on the chosen platform.

- GPU-enabled virtual machine images or instances.

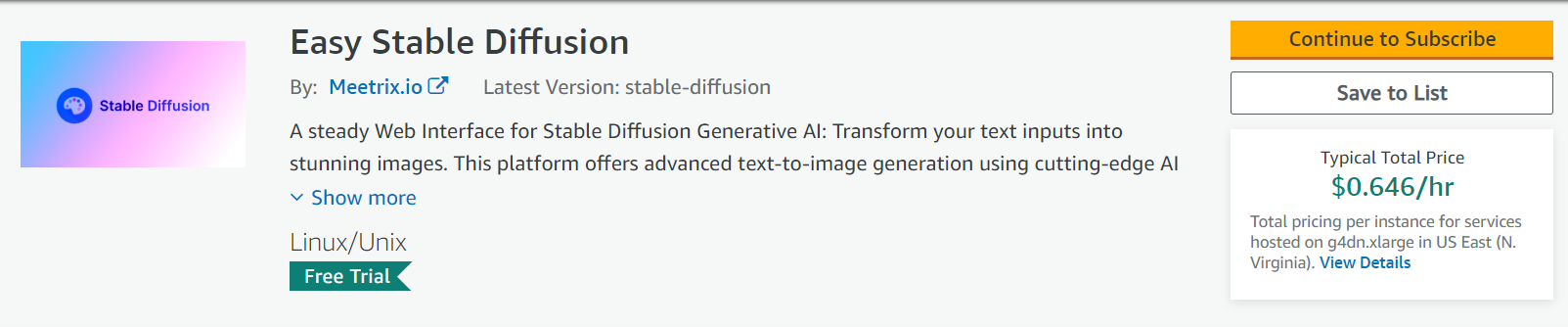

Benefits of Using a Preconfigured Stable Diffusion AMI

- One-Click Deployment: Fooocus, an image-generation interface built on Stable Diffusion, is ready to use as soon as the instance launches, with no manual setup.

- Fully Pre-configured AMI: Because the AMI ships with everything already installed and configured, Stable Diffusion becomes more accessible and user-friendly.

- Low-Cost SaaS SME Solution: Built on Stable Diffusion, Fooocus gives small and medium enterprises a cost-effective solution.

- Unbeatable Pricing: Stable Diffusion offers strong value for money, which allows Fooocus to reduce operational costs by 75%.

- Hassle-Free Experience: The preconfigured setup takes care of complex installation and configuration steps, so users can focus on what truly matters.

- Proven Reliability: The Stable Diffusion setup has undergone extensive testing to ensure it is a reliable solution.

- Pay-Per-Hour Flexibility: You pay by the hour, only for the time your instance is running.

- Amazon-Backed Security: The deployment runs inside your own AWS account and benefits from Amazon's security measures.

- User-Centric Data Control: Because Stable Diffusion runs on your own instance, you keep comprehensive control over your data.

- Automatic Safety Nets: Backup and restore functions protect user data.

- Customer Support: Customer support helps users get the most out of their Stable Diffusion deployment.

- Integrated Tools: A Stable Diffusion-based interface like Fooocus comes with productivity-enhancing features.

- Transparent Pricing: Pricing is straightforward, with no hidden costs.

- Regular Updates: Continuous updates keep the Stable Diffusion setup at the forefront of the industry.

- GDPR Compliance through Self-Hosting for Stable Diffusion

Data Control and Privacy: By deploying Stable Diffusion via Meetrix's AMI on AWS, organizations take advantage of self-hosting capabilities. This approach allows for comprehensive control over the AI's data processing and storage, ensuring adherence to GDPR by safeguarding user data and maintaining privacy.

Secure Data Processing: The self-hosted Stable Diffusion setup ensures that all interactions, including image generation requests and outputs, are processed within the secure confines of the organization's AWS environment. This setup is instrumental in preventing unauthorized access to sensitive data and supports GDPR's strict data protection requirements.

- Comprehensive Commercial Support for Stable Diffusion

Tailored Deployment Strategy: Meetrix provides commercial support tailored to the unique needs of organizations deploying Stable Diffusion. This includes guidance on setting up a GDPR-compliant AI environment, advice on secure data handling, and ensuring that the AI model's deployment aligns with privacy regulations.

API Support for Enhanced Integration: To streamline the deployment and integration of Stable Diffusion, Meetrix offers extensive API support. This assistance is crucial for organizations looking to integrate this AI model with existing systems or applications, ensuring seamless operation while maintaining GDPR compliance.

Ongoing Security and Compliance Updates: Organizations benefit from Meetrix's commitment to continuous improvement and support. This includes receiving updates and patches that enhance the security of the Stable Diffusion model, along with adjustments to remain compliant with evolving GDPR and other privacy regulations.

Dedicated Technical Support: Meetrix's commercial support extends to providing dedicated technical assistance for deploying and managing Stable Diffusion. This ensures organizations can leverage AI for creative and computational tasks while adhering to the highest standards of data privacy and security as mandated by GDPR.

Setting Up a Cloud Provider Account:

While this guide focuses on AWS, the largest cloud provider and one of the most versatile, the core concepts carry over to other platforms such as Google Cloud or Azure.

If you haven't already, create an AWS account, make sure your IAM user has permission to launch and manage EC2 instances, and familiarize yourself with the AWS Management Console. New accounts may have an EC2 vCPU quota of zero for GPU instance families, so check Service Quotas and request an increase for G or P instances before trying to launch one. This initial setup is key to deploying and managing Stable Diffusion smoothly in the cloud.

Understanding GPU Requirements for Stable Diffusion

Stable Diffusion's image generation is computationally heavy, and this is precisely where GPUs (Graphics Processing Units) enter the picture.

- Why GPUs are Essential for Deep Learning Models like Stable Diffusion

Deep learning models, with their multi-layered neural networks, require a multitude of parallel computations. GPUs, inherently designed for handling thousands of threads concurrently, are thus indispensable for these models. Their architecture is tailored for the kind of matrix operations and transformations quintessential in AI computations. For a model like Stable Diffusion, which entails high-dimensional data processing for text-to-image synthesis, GPU acceleration is not just beneficial—it's vital.

Comparison of Local GPU vs. Cloud GPU:

Local GPU

- Pros: Direct access, consistent performance, no internet bandwidth considerations.

- Cons: High upfront costs, potential for rapid hardware obsolescence, limited scalability.

Cloud GPU

- Pros: Scalable infrastructure, no upfront hardware costs, access to state-of-the-art GPU models, enhanced collaboration opportunities.

- Cons: Recurring costs, dependency on internet connectivity, and potential latency issues.

Given the pros and cons, for individuals or organizations that prioritize flexibility, scalability, and staying updated with cutting-edge technology, cloud GPUs emerge as the preferable choice.

Setting Up on AWS

The powerhouse of cloud computing, Amazon Web Services (AWS), has been at the forefront of empowering AI applications by offering a broad spectrum of tools and functionalities. Let's dive deep into the process of priming AWS for running Stable Diffusion.

EC2 GPU Instances

Amazon Elastic Compute Cloud (EC2) is the backbone of AWS's computing power, offering flexible and scalable virtual servers in the cloud.

Choosing the Right GPU Instance

AWS offers a wide range of GPU instances for tasks such as computational fluid dynamics, deep learning, and graphics-intensive applications. For a compute-hungry model like Stable Diffusion:

- Consider the p3 and p4 series. These instances come with NVIDIA Tesla V100 and NVIDIA A100 Tensor Core GPUs, respectively, offering substantial memory and processing power.

- Match the instance to your workload. For generating images (inference), a single-GPU g4dn (NVIDIA T4) or g5 (NVIDIA A10G) instance is often enough and costs considerably less than the p series. For sporadic heavier tasks, a p3 instance might suffice, while larger, sustained workloads such as fine-tuning may call for a p4 instance.
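The idea of matching an instance to your workload can be made concrete with a small helper. This is a minimal sketch: the candidate list (g4dn.xlarge with one NVIDIA T4, g5.xlarge with one A10G, p3.2xlarge with one V100, p4d.24xlarge with eight A100s) and its cheapest-first ordering are assumptions for illustration, and pick_instance is a hypothetical name. Confirm current specs and prices in the AWS documentation before relying on it.

```python
# Minimal sketch: pick the first GPU instance type (in assumed cheapest-first
# order) whose per-GPU memory and GPU count meet a requirement.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GpuInstance:
    name: str
    gpu_model: str
    gpu_count: int
    gpu_mem_gb: int  # memory per GPU


# Assumed ordering from lowest to highest on-demand price; verify on AWS.
CANDIDATES = [
    GpuInstance("g4dn.xlarge", "NVIDIA T4", 1, 16),
    GpuInstance("g5.xlarge", "NVIDIA A10G", 1, 24),
    GpuInstance("p3.2xlarge", "NVIDIA V100", 1, 16),
    GpuInstance("p4d.24xlarge", "NVIDIA A100", 8, 40),
]


def pick_instance(min_gpu_mem_gb: int, min_gpus: int = 1) -> Optional[GpuInstance]:
    """Return the first candidate meeting both requirements, or None."""
    for inst in CANDIDATES:
        if inst.gpu_mem_gb >= min_gpu_mem_gb and inst.gpu_count >= min_gpus:
            return inst
    return None
```

For example, pick_instance(12) selects g4dn.xlarge, while asking for four GPUs with pick_instance(16, min_gpus=4) skips straight to p4d.24xlarge.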

Pricing and On-demand vs. Reserved Instances

The beauty of AWS is the flexibility in pricing.

- On-demand Instances: Ideal for short-term or unpredictable workloads. You pay for compute capacity by the hour or by the second, depending on the instances you launch, with no long-term commitment.

- Reserved Instances: Best for predictable, steady workloads. They provide a discounted hourly rate and, when scoped to a specific Availability Zone, a capacity reservation. Choose a 1- or 3-year term and pay all upfront, partially upfront, or nothing upfront.
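To choose between the two pricing models, compare what a month costs under each. The sketch below assumes a 730-hour month and uses placeholder hourly rates rather than real AWS prices; the function names are hypothetical.

```python
# Minimal sketch: compare monthly on-demand and reserved costs for one instance.
# Rates passed in are placeholders, not real AWS prices.
HOURS_PER_MONTH = 730  # common convention for a billing month


def monthly_on_demand(rate_per_hour: float, hours_used: float) -> float:
    # On-demand: you pay only for the hours the instance actually runs.
    return rate_per_hour * hours_used


def monthly_reserved(effective_rate_per_hour: float) -> float:
    # Reserved: billed for every hour of the term, whether used or not.
    return effective_rate_per_hour * HOURS_PER_MONTH


def break_even_utilization(on_demand_rate: float, reserved_effective_rate: float) -> float:
    """Fraction of the month the instance must run before reserving is cheaper."""
    if on_demand_rate <= 0:
        raise ValueError("on_demand_rate must be positive")
    return reserved_effective_rate / on_demand_rate
```

With a hypothetical on-demand rate of 1.00 per hour and an effective reserved rate of 0.60 per hour, break_even_utilization(1.0, 0.6) returns 0.6: the instance would need to run about 60% of the month before the reservation pays off.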

Security and Access

While AWS provides mighty computational tools, ensuring the sanctity and security of your applications and data is paramount.

Setting Up Security Groups

- Think of security groups as virtual firewalls. Each group houses a set of rules, defining which traffic is allowed into and out of your EC2 instances.

- Be judicious when setting up rules. For instance, allowing SSH traffic only from known IP addresses can greatly enhance security.
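As an illustration of tight rules, the sketch below builds inbound rules in the IpPermissions shape that boto3's EC2 client method authorize_security_group_ingress expects, allowing SSH and a WebUI port only from a single address. The helper names, the IP address (from the 203.0.113.0/24 documentation range), and the port are placeholders.

```python
# Minimal sketch: build IPv4 ingress rules for boto3's
# ec2.authorize_security_group_ingress(GroupId=..., IpPermissions=...).
import ipaddress
from typing import Dict, List


def ingress_rule(port: int, cidr: str, description: str) -> Dict:
    network = ipaddress.IPv4Network(cidr, strict=True)  # raises ValueError on bad input
    if network.prefixlen == 0:
        # 0.0.0.0/0 parses fine but would open the port to everyone.
        raise ValueError(f"refusing to open port {port} to the whole internet")
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": str(network), "Description": description}],
    }


def build_rules(my_ip: str, webui_port: int) -> List[Dict]:
    cidr = f"{my_ip}/32"  # exactly one known address
    return [
        ingress_rule(22, cidr, "SSH from my workstation"),
        ingress_rule(webui_port, cidr, "Stable Diffusion WebUI"),
    ]
```

To apply the rules, create a client with boto3.client("ec2") and call authorize_security_group_ingress(GroupId="sg-...", IpPermissions=build_rules("203.0.113.10", 7860)) on it, substituting your own security group ID, address, and port.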

Ensuring SSH Access

- Once you launch your EC2 instance, you can connect to it securely using the Secure Shell (SSH) protocol.

- During the setup phase, AWS prompts you to set up key pairs (private and public keys). Safeguard the private key—it's your ticket to accessing your EC2 instance.

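- To connect, run ssh -i /path/to/your-key.pem ec2-user@[your-instance-public-DNS], replacing the key path and host with your own. The default user name depends on the AMI: ec2-user for Amazon Linux, ubuntu for Ubuntu.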
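SSH refuses to use a private key that other users can read, so the key file is usually locked down with chmod 400 before connecting. The sketch below does the same from Python and assembles the ssh command line; the helper names, key path, and host are placeholders.

```python
# Minimal sketch: restrict a private key to owner-read-only and build the
# ssh command line. Paths, host names, and user names are placeholders.
import os
import stat
from typing import List


def prepare_key(key_path: str) -> None:
    # Same effect as `chmod 400 key.pem`: owner may read, nobody else has access.
    os.chmod(key_path, stat.S_IRUSR)


def ssh_command(key_path: str, host: str, user: str = "ec2-user") -> List[str]:
    # "ec2-user" is the default on Amazon Linux AMIs; Ubuntu AMIs use "ubuntu".
    return ["ssh", "-i", key_path, f"{user}@{host}"]
```

Passing the resulting list to subprocess.run opens an interactive session, just as typing the command in a terminal would.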

Installing Necessary Software

No deep learning task can kick off without the right environment, and Stable Diffusion is no exception.

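NVIDIA Drivers and CUDA

- The NVIDIA driver lets the operating system communicate with the GPU, and CUDA, NVIDIA's parallel computing platform, lets deep learning frameworks run their computations on it.

- Once your EC2 instance is up, install the NVIDIA driver and the appropriate CUDA toolkit version from NVIDIA's official site, unless you launched an AMI that already includes them, such as an AWS Deep Learning AMI. Make sure the CUDA version you choose is compatible with your framework and other software dependencies.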
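A quick way to confirm that the driver sees the GPU is to query nvidia-smi. The sketch below runs it with the --query-gpu and --format=csv,noheader,nounits options and parses one line per GPU; the helper names are hypothetical. If PyTorch is installed, torch.cuda.is_available() offers a second check from inside Python.

```python
# Minimal sketch: ask nvidia-smi for basic GPU facts and parse its CSV output.
import subprocess
from typing import Dict, List

QUERY = "name,driver_version,memory.total,memory.used,utilization.gpu"


def parse_gpu_query(csv_text: str) -> List[Dict[str, str]]:
    """Turn `--format=csv,noheader,nounits` output into one dict per GPU."""
    fields = QUERY.split(",")
    gpus = []
    for line in csv_text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        gpus.append(dict(zip(fields, values)))
    return gpus


def query_gpus() -> List[Dict[str, str]]:
    # Raises FileNotFoundError if nvidia-smi (i.e. the driver) is not installed.
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_query(out)
```

An empty list, or an exception from query_gpus(), means the driver is missing or not loaded.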

Dependencies for Stable Diffusion

- Depending on the framework and version of Stable Diffusion you're working with, you may need to install specific Python libraries or other tools.

- Refer to Stable Diffusion's official documentation to ensure you've installed all the necessary prerequisites. It's always a good practice to use virtual environments (like venv or conda) to manage these dependencies and avoid conflicts.
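Before launching, it helps to confirm which dependencies are present in the active environment. This minimal sketch uses the standard library's importlib.metadata; the helper names are hypothetical, and the package names you pass in should come from the project's own requirements file.

```python
# Minimal sketch: report installed versions of required packages and whether
# a venv-style virtual environment is active.
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, List, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None  # not installed in this environment
    return result


def missing(packages: Iterable[str]) -> List[str]:
    return [name for name, v in installed_versions(packages).items() if v is None]


def in_virtualenv() -> bool:
    # True inside a venv; conda environments are not detected this way.
    return sys.prefix != sys.base_prefix
```

For instance, missing(["torch", "diffusers"]) lists whichever of those packages still needs installing, and comparing the reported versions against the documentation's recommendations catches mismatches early.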

Remember, setting up AWS requires attention to detail. The aim is to create a secure, scalable, and cost-effective environment that seamlessly hosts and accelerates your Stable Diffusion tasks.

How to Deploy Stable Diffusion on AWS

Transitioning from a local environment to a cloud platform can be intimidating. However, the flexibility and power cloud solutions provide are unmatched. Here's a step-by-step guide to deploying Stable Diffusion on your AWS cloud.

- Cloning the Stable Diffusion Repository

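- Before running Stable Diffusion, you need its source code on your EC2 instance. Clone the repository and change into its directory:

git clone [Repository URL]
cd [Repository Name]

This assumes you have Git installed on your EC2 instance. If not, run “sudo yum install git -y” on Amazon Linux (or “sudo apt install git -y” on Ubuntu).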

- Running the Model on the Cloud Instance

- With the code now on your server, navigate to the root directory of Stable Diffusion.

- Run any necessary setup scripts or commands as mentioned in the repository’s README or official documentation.

Note: A typical start command looks like python3 [name-of-the-script].py. Make sure to replace [name-of-the-script].py with the actual name of the startup script for Stable Diffusion.

- Adjusting Configurations for Optimal Performance

- Many deep learning models, including Stable Diffusion, offer configuration files where you can set parameters, batch sizes, and other attributes.

- Optimize these configurations to make the most of your AWS GPU resources. If unsure about optimal settings, refer to community forums or Stable Diffusion's documentation for recommendations.

Accessing and Using the WebUI

One of the most thrilling aspects of Stable Diffusion is its ability to generate images directly from textual input via a user-friendly WebUI.

Launching the Stable Diffusion WebUI

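- Stable Diffusion is usually run through a WebUI front end such as Fooocus. Once you've started it on your EC2 instance, you can usually access it by navigating to your instance's public DNS on the configured port, for example http://[your-instance-public-DNS]:[port].

Ensure that your security group allows incoming traffic on the port the WebUI runs on, ideally only from your own IP address. Many WebUIs listen only on localhost by default, so check the project's README for the option that makes them accept remote connections.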
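If the page does not load, first check whether the port is reachable at all. The sketch below builds the WebUI URL and tests a TCP connection from your workstation. The default port of 7860 is an assumption (it is Gradio's default, used for example by AUTOMATIC1111's WebUI), and the helper names are hypothetical.

```python
# Minimal sketch: build the WebUI URL and test whether its port accepts
# TCP connections. The default port is an assumption; use your WebUI's port.
import socket


def webui_url(public_dns: str, port: int = 7860) -> str:
    return f"http://{public_dns}:{port}"


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

If port_is_open returns False, the usual suspects are the security group, the WebUI listening only on localhost, or the WebUI not running. An alternative that keeps the port closed to the internet is an SSH tunnel: ssh -i your-key.pem -L 7860:localhost:7860 ec2-user@[your-instance-public-DNS], then browse to http://localhost:7860.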

Tips for Efficient Text-to-Image Generation:

- Precision in Phrasing: Be specific with your textual inputs. Instead of "a big dog", try "a large golden retriever on a green meadow" for more accurate results.

- Experiment: Sometimes, slight rephrasing or adding additional context can result in vastly different and potentially better image outputs.

- Monitor Resources: Amazon CloudWatch reports CPU utilization for EC2 instances out of the box, while memory and GPU metrics require the CloudWatch agent (or a tool such as nvidia-smi on the instance). Keeping an eye on these can help you decide whether to adjust configurations or upgrade your EC2 instance type for smoother performance.

Leveraging the cloud for Stable Diffusion ensures you get top-notch image results without hefty investments in local infrastructure. As with any deployment, continuous learning and adjustments will optimize your experience and output.

Costs and Optimization

Navigating the cost structure of AWS, especially with GPU instances, can initially seem complex. But with a deeper understanding and some smart optimization tactics, you can achieve efficiency without compromising on performance.

Understanding AWS GPU Instance Costs

- AWS EC2 GPU instances, especially the p and g series, are optimized for compute-heavy tasks like deep learning.

- The cost primarily depends on the type and size of the instance and the region you're operating in. Always refer to the AWS official pricing page for the most up-to-date rates.

- Additionally, data transfer and storage costs (e.g., for EBS volumes) might add to your bill.

Tips to Minimize Costs

- Stopping Instances: Always remember to stop your EC2 instance when not in use. While stopped, you only pay for storage, which is significantly less than running costs.

- Reserved Instances: If you're planning long-term usage, consider purchasing reserved instances. They offer substantial discounts compared to on-demand pricing.

- Spot Instances: These use spare EC2 capacity at a steep discount compared with on-demand prices. However, AWS can reclaim them with a two-minute warning when it needs the capacity back, so they're best for flexible, interruptible tasks.

- Budget Alerts: Set up AWS Budgets to alert you if your spending exceeds a predetermined threshold.
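Remembering to stop idle instances can be partly automated. This minimal sketch decides whether a GPU has been idle long enough to stop the instance; the threshold, window length, and function name are illustrative assumptions, and the utilization samples could come from nvidia-smi's utilization.gpu field.

```python
# Minimal sketch: decide whether a GPU instance has been idle long enough to stop.
# Threshold and window are illustrative assumptions.
from typing import Sequence


def should_stop(gpu_util_samples: Sequence[float], threshold_pct: float = 5.0,
                min_samples: int = 12) -> bool:
    """True if the last `min_samples` utilization readings are all below threshold."""
    if len(gpu_util_samples) < min_samples:
        return False  # not enough history to judge
    recent = gpu_util_samples[-min_samples:]
    return all(u < threshold_pct for u in recent)
```

With one sample every five minutes, the default window covers an hour. When should_stop returns True, a script could call stop_instances(InstanceIds=["i-..."]) on a boto3 EC2 client, with your own instance ID in place of the placeholder.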

Scaling Considerations

- While individual GPU instances are powerful, sometimes you may need more. AWS offers EC2 Auto Scaling to adjust capacity based on requirements.

- Consider using Elastic Load Balancing to distribute incoming application traffic across multiple targets, such as EC2 instances.
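One common way to size a fleet of GPU workers is by backlog: divide the number of queued generation jobs by how many jobs one instance can handle, then clamp the result to the Auto Scaling group's limits. The sketch below shows that arithmetic; the function name, queue, and per-instance capacity are assumptions for illustration.

```python
# Minimal sketch: compute a desired instance count from a job backlog,
# clamped to the Auto Scaling group's min and max size.
import math


def desired_capacity(queue_depth: int, jobs_per_instance: int,
                     min_size: int = 0, max_size: int = 4) -> int:
    """Instances needed so each handles at most `jobs_per_instance` queued jobs."""
    if jobs_per_instance <= 0:
        raise ValueError("jobs_per_instance must be positive")
    needed = math.ceil(queue_depth / jobs_per_instance)
    return max(min_size, min(max_size, needed))
```

The result could be applied with a boto3 Auto Scaling client's set_desired_capacity(AutoScalingGroupName=..., DesiredCapacity=...). In practice, a target tracking policy on a custom backlog-per-instance metric lets Auto Scaling do this adjustment for you.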

Advantages of Using Cloud GPU for Stable Diffusion

Leveraging cloud resources, particularly GPUs, for applications like Stable Diffusion can significantly improve speed and efficiency. Here are the key advantages:

- Flexibility and Scalability: Cloud platforms like AWS allow you to adjust resources on the fly. Need more power? Upgrade your instance. Need to cut costs? Downgrade or stop your instance. You're in control.

- Access to State-of-the-art GPU Hardware without Upfront Investment: Purchasing and maintaining cutting-edge GPU hardware can be prohibitively expensive. Cloud providers let you rent the best, ensuring you always have access to the latest tech without the capital expenditure.

- Collaboration and Sharing Capabilities: Cloud platforms inherently promote collaboration. Team members can access the instance, share data, or even replicate environments, facilitating smoother team projects.

Incorporating cloud GPU for Stable Diffusion not only ensures optimal performance but also promotes a flexible, collaborative, and cost-effective working environment. With cloud resources at your disposal, the sky's the limit for what Stable Diffusion can achieve.

Key Points to Consider When Running Stable Diffusion on a Cloud Server

Hardware Acceleration & Performance Metrics

- Cloud GPU Acceleration: Cloud GPUs, with their massively parallel processing capabilities, significantly accelerate deep learning workloads like Stable Diffusion.

- Monitoring Performance: Amazon CloudWatch can track GPU utilization, memory usage, and inference time (GPU and memory metrics through the CloudWatch agent, inference time as a custom metric you publish), helping you use resources as efficiently as possible.

EBS Volumes & Data Persistence

- EBS Overview: Elastic Block Store provides persistent block storage that survives instance stops and restarts, which is crucial for deep learning tasks.

- Handling EBS: Attaching, resizing, and backing up EBS volumes (via snapshots) is essential for preserving model weights, datasets, and generated images.

Advanced Configuration

- GPU Optimization: Tune batch sizes so the GPU is fully utilized without causing out-of-memory errors.

- Hyperparameter Tuning: Settings such as the number of sampling steps, the guidance scale, and the image resolution affect both generation time and image quality; start from the values recommended in the documentation and adjust from there.
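Finding the largest batch size that fits in GPU memory can be done by backing off on out-of-memory errors. In this minimal sketch, run_batch is a hypothetical callable that generates one batch, and the exception type is a parameter so the logic can be exercised without a GPU; with recent PyTorch releases you would pass torch.cuda.OutOfMemoryError.

```python
# Minimal sketch: halve the batch size on out-of-memory errors until one fits.
from typing import Callable, Type


def largest_working_batch(run_batch: Callable[[int], None], start: int = 8,
                          oom_error: Type[BaseException] = MemoryError) -> int:
    """Return the first batch size (start, start/2, ...) that runs, or 0 if none fits."""
    batch = start
    while batch >= 1:
        try:
            run_batch(batch)
            return batch
        except oom_error:
            # With PyTorch, calling torch.cuda.empty_cache() here frees cached blocks.
            batch //= 2
    return 0
```

Halving converges quickly, but the result can be smaller than the true maximum; a finer search between the last failing and first working size can recover the remainder if it matters.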

Autoscaling & Load Balancers

- Dynamic Scaling: AWS Auto Scaling adjusts the number of GPU instances based on demand, ensuring optimal resource utilization.

- Load Balancing: Elastic Load Balancing distributes incoming application traffic across instances, improving availability and fault tolerance.

Data Transfer & Storage Solutions

- Efficient Data Transfers: Tools like AWS DataSync or S3 Transfer Acceleration speed up moving large datasets and model files.

- Storage Solutions: Amazon S3 is a secure and scalable place to store generated images or even training datasets.
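When storing generated images in S3, a predictable key layout makes them easy to browse and clean up later. The sketch below builds date-partitioned object keys; the prefix, layout, and function name are assumptions for illustration, not an S3 requirement.

```python
# Minimal sketch: build date-partitioned S3 object keys for generated images,
# e.g. generated/2024/01/05/<id>.png. The layout is an illustrative choice.
import posixpath
import uuid
from datetime import datetime, timezone
from typing import Optional


def output_key(prefix: str, ext: str = "png", when: Optional[datetime] = None,
               image_id: Optional[str] = None) -> str:
    when = when or datetime.now(timezone.utc)
    image_id = image_id or uuid.uuid4().hex  # random ID avoids name collisions
    return posixpath.join(prefix, when.strftime("%Y/%m/%d"), f"{image_id}.{ext}")
```

The key can then be passed to a boto3 S3 client's upload_file(Filename, Bucket, Key), for example upload_file("out.png", "my-bucket", output_key("generated")), where the bucket name is a placeholder.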

Integrating with Other AWS AI/ML Services

- Amazon SageMaker: SageMaker can be used to train and deploy Stable Diffusion as a managed service, as an alternative to running your own EC2 instances.

- Serverless Post-Processing: AWS Lambda can process generated images without the need to provision or manage servers.
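A common pattern is to trigger a Lambda function whenever a new image lands in S3. This minimal sketch extracts the bucket and key from an S3 event notification (keys arrive URL-encoded) and leaves the actual post-processing as a placeholder; the helper name is hypothetical.

```python
# Minimal sketch: a Lambda handler that reads (bucket, key) pairs from an
# S3 event notification. The post-processing step itself is a placeholder.
from typing import List, Tuple
from urllib.parse import unquote_plus


def objects_from_s3_event(event: dict) -> List[Tuple[str, str]]:
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        key = unquote_plus(s3["object"]["key"])  # e.g. "my+image.png" -> "my image.png"
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    objects = objects_from_s3_event(event)
    for bucket, key in objects:
        # Resize, watermark, or tag the image here.
        print(f"new image: s3://{bucket}/{key}")
    return {"processed": len(objects)}
```

Keeping the event parsing in its own function makes it easy to test locally with a sample event, without deploying anything.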

These points cover the entire process, from setup and optimization to integrating other AWS services into a streamlined workflow.

Potential Pitfalls and Troubleshooting

Setting up and running complex models like Stable Diffusion on cloud GPUs is not without its challenges. Here are some commonly faced issues and how you can tackle them:

- Insufficient GPU Memory: Stable Diffusion, being a deep learning model, can be memory-intensive. If you receive memory errors, consider upgrading to a GPU instance with more memory or reducing memory use, for example by lowering the batch size or image resolution, or running the model in half precision.

- Software Dependency Conflicts: With evolving software libraries, there can be version conflicts. Ensure you're using compatible versions of all software and libraries. Check the model’s official documentation or repository for recommended software versions.

- Network Latency: When accessing your model over the internet, you might face latency issues, especially with large data transfers. Opt for regions closest to your primary user base and use services like Amazon CloudFront to optimize content delivery.

- Security Concerns: Exposing your instance to the internet can be risky. Always ensure your security groups are tight, only allowing necessary ports to be open and constantly monitoring for any suspicious activity.

Tips for Troubleshooting

- Logs Are Your Friend: Most issues will generate error logs. Make a habit of checking them to understand the root cause of any problem.

- Community Forums: Platforms like AWS have extensive community forums. Chances are someone else has faced the same issue and found a solution.

- Keep Backups: Before making significant changes, always back up your configurations and data. AWS offers snapshot features for this purpose.
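Checking logs is easier with a quick scan for well-known error strings. The patterns in this minimal sketch are typical PyTorch and Python messages chosen for illustration, not an exhaustive list, and the function name is hypothetical.

```python
# Minimal sketch: flag common failure messages in a log file.
import re
from typing import Iterable, List, Tuple

# Illustrative patterns; extend with messages from your own setup.
PATTERNS = [
    ("gpu_out_of_memory", re.compile(r"CUDA out of memory", re.IGNORECASE)),
    ("no_gpu_visible", re.compile(r"No CUDA GPUs are available", re.IGNORECASE)),
    ("missing_python_module", re.compile(r"ModuleNotFoundError: No module named")),
]


def scan_log(lines: Iterable[str]) -> List[Tuple[int, str, str]]:
    """Return (line number, category, line text) for each matching line."""
    hits = []
    for number, line in enumerate(lines, start=1):
        for category, pattern in PATTERNS:
            if pattern.search(line):
                hits.append((number, category, line.rstrip()))
                break  # one category per line is enough
    return hits
```

Because scan_log accepts any iterable of lines, an open file object works directly: with open("webui.log") as f: print(scan_log(f)), where the log file name is a placeholder.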

Conclusion

Running Stable Diffusion on a cloud GPU offers flexibility, scalability, and power without significant upfront hardware investment. By following the steps outlined in this guide, you're well on your way to using this powerful model in the cloud. As with any technological endeavor, challenges will arise, but with the right knowledge and resources, they can be overcome.

Remember, the cloud offers a sandbox for experimentation. So, dive in, explore, and push the boundaries of what's possible with Stable Diffusion and cloud GPUs. Your next big innovation might be just around the corner. Happy coding!