How to Install Mixtral 8x7B in AWS Easily: Open Source Self-Hosted AI Model

Introduction to Mixtral 8x7B

In the dynamic realm of Artificial Intelligence (AI), language models stand as pillars of technological innovation, revolutionizing how we interact with machines and data. These models, driven by complex algorithms and vast data sets, have redefined the boundaries of machine learning and natural language processing. Among the most notable advancements is the emergence of Mixtral 8x7B, a model developed by Mistral AI that is reshaping our understanding of AI capabilities.

Mistral AI and the Emergence of Mixtral 8x7B

Mistral AI, a trailblazer in the AI industry, has made significant strides with the release of Mixtral 8x7B. This model is not just an incremental improvement over its predecessors but a monumental leap forward. With its innovative architecture and impressive processing power, Mixtral 8x7B has set new benchmarks in language modeling, challenging the status quo of existing AI models.

Meetrix.io: Simplifying the Deployment of Mixtral 8x7B

Understanding the complexities of deploying advanced AI models, Meetrix.io has played a crucial role in bringing Mixtral 8x7B's capabilities to a broader audience. By developing specialized Amazon Machine Images (AMIs), Meetrix.io has streamlined the deployment process, making it accessible even to those with limited technical expertise. This initiative not only democratizes access to cutting-edge AI technology but also empowers businesses and developers to harness the full potential of Mixtral 8x7B in their digital ecosystems.

As we delve deeper into the intricacies of Mixtral 8x7B and its impact on the AI landscape, it's evident that this model is not just an AI tool but a beacon of innovation, guiding us toward a future where AI and human intelligence collaborate seamlessly.

Background of Mixtral 8x7B: A Visionary Leap in AI Language Modeling

The Genesis of Mixtral 8x7B within Mistral AI

Mistral AI, renowned for its pioneering efforts in AI, embarked on a visionary project to develop a language model that transcends traditional boundaries. This led to the birth of Mixtral 8x7B, a model conceived not just to advance AI technology but to redefine it. The development of Mixtral 8x7B was driven by a deep understanding of the limitations inherent in existing models and a bold ambition to overcome these barriers.

Objectives and Ambitions Driving Mixtral 8x7B's Development

At the core of Mixtral 8x7B's development were several key objectives:

- Enhanced Performance and Efficiency: Mistral AI aimed to create a model that not only delivers superior performance but also operates with remarkable efficiency. Mixtral 8x7B was designed to outperform existing models while optimizing computational resources.

- Multilingual and Multifaceted Applications: Recognizing the global nature of AI applications, Mixtral 8x7B was developed to excel in multiple languages and cater to a wide range of tasks, from natural language understanding to complex code generation.

- Open-Source Accessibility: Mistral AI released Mixtral 8x7B with open weights under the Apache 2.0 license, making it accessible to the wider AI community. This approach fosters innovation and collaborative development, allowing developers worldwide to build on and benefit from Mixtral 8x7B.

- Setting New Benchmarks in AI: The overarching ambition behind Mixtral 8x7B was to set new standards in AI language modeling, challenging existing paradigms and paving the way for future innovations.

The Inception and Evolution of Mixtral 8x7B

The journey of Mixtral 8x7B from conception to realization reflects Mistral AI's commitment to groundbreaking research and development. The team at Mistral AI, comprising leading AI researchers and engineers, undertook extensive experimentation and testing to refine Mixtral 8x7B's capabilities. This relentless pursuit of excellence resulted in a model that not only achieves its intended objectives but also serves as a catalyst for future advancements in AI.

The development of Mixtral 8x7B by Mistral AI marks a significant milestone in the field of AI language models. Driven by ambitious objectives and a visionary approach, Mixtral 8x7B stands as a testament to Mistral AI's commitment to advancing AI technology and its potential to reshape various industries and applications. As we explore Mixtral 8x7B's technical intricacies in the following sections, we gain a deeper appreciation of its impact and the innovative spirit that fueled its creation.

Technical Overview of Mixtral 8x7B

The Architectural Ingenuity of Mixtral 8x7B

Mixtral 8x7B, a creation of Mistral AI, stands as a marvel in the realm of AI language models, primarily due to its unique architectural design - the Sparse Mixture of Experts (SMoE). This architecture is a radical departure from conventional models, offering a blend of efficiency and power that was previously unattainable.

- Sparse Mixture of Experts (SMoE) Architecture: At its core, Mixtral 8x7B replaces each feed-forward block with eight 'expert' networks. For every token, a router selects two of the eight experts at each layer and combines their outputs. This improves the model's overall performance while keeping processing efficient, as only the selected experts run for a given input.

- Efficient Parameter Utilization: Despite its vast parameter count of 46.7 billion, Mixtral 8x7B operates with the agility of a much smaller model. Each token processed by the model engages only a fraction of these parameters, approximately 12.9 billion, ensuring speed without compromising depth.
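The routing idea above can be sketched in a few lines of plain Python. This is a toy illustration, not Mistral AI's implementation: the eight "experts" are simple multipliers, the router scores are made up, and the function names `top2_route` and `moe_layer` are hypothetical. It only shows how two experts are selected per token and how their outputs are weighted.

```python
import math

def top2_route(router_logits):
    """Pick the two highest-scoring experts and softmax-normalise their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:2]
    exps = [math.exp(router_logits[i]) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]

def moe_layer(x, experts, router_logits):
    """Weighted sum of the outputs of the two selected experts only."""
    return sum(w * experts[i](x) for i, w in top2_route(router_logits))

# Eight toy "experts": expert k simply multiplies its input by k.
experts = [lambda x, k=k: k * x for k in range(8)]

# Made-up router scores for a single token (assumption for illustration).
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.0]

routes = top2_route(logits)              # which experts run, and their weights
output = moe_layer(3.0, experts, logits)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 12.9 / 46.7
print(routes, output, round(active_fraction, 3))
```

With the figures quoted above, roughly 28% of the parameters take part in processing any single token, which is where the speed advantage comes from.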

Model Specifics: Innovations and Capabilities

- High-Speed Inference: One of the most notable achievements of Mixtral 8x7B is its inference speed. The model runs inference six times faster than Llama 2 70B, setting a new benchmark in processing efficiency.

- Multilingual Proficiency: Emphasizing its global applicability, Mixtral 8x7B is proficient in multiple languages including English, French, Italian, German, and Spanish. This multilingual capability extends its utility across diverse linguistic landscapes, making it a versatile tool for international applications.

- OpenAI API Compatibility: The deployment described in this guide exposes Mixtral 8x7B through an OpenAI-compatible API, so users already familiar with OpenAI's ecosystem can transition to Mixtral 8x7B with minimal friction.

- Advanced Context Handling: The model's ability to manage up to 32,000 tokens grants it the capacity to handle extensive data inputs. This capability is crucial for deep analysis and complex generation tasks, allowing the model to maintain context over longer conversations or documents.

Mixtral 8x7B is not just another addition to the landscape of AI language models; it is a groundbreaking innovation that redefines the standards of efficiency, versatility, and depth in AI processing. As we delve deeper into its performance and benchmarking in the next section, the true extent of Mixtral 8x7B's capabilities will become even more apparent.

Mixtral 8x7B Instruct v0.1: A Technical Marvel

Mixtral 8x7B Instruct v0.1 is a groundbreaking model developed by Mistral AI. It’s characterized by its sparse mixture-of-experts architecture, offering a remarkable 6x faster inference than Llama 2 70B. With the ability to handle a context of 32k tokens and support multiple languages, including English, French, Italian, German, and Spanish, it excels in both code generation and natural language processing tasks.

Key Features

- High-Speed Performance: The model offers 6x faster inference than Llama 2 70B, making it highly efficient for complex AI tasks.

- Multilingual Support: With its proficiency in several languages, Mixtral 8x7B is versatile and adaptable to global AI applications.

- OpenAI API Compatibility: Designed to align with OpenAI standards, ensuring a seamless experience for users accustomed to OpenAI's ecosystem.

- Advanced Context Handling: Capable of managing up to 32k tokens, it offers deep and comprehensive analysis and generation capabilities.

Performance and Benchmarking of Mixtral 8x7B: A Comparative Analysis

In the competitive arena of AI language models, the true mettle of a model like Mixtral 8x7B is tested through rigorous benchmarking and comparative analysis. Let's delve into how Mixtral 8x7B stacks up against some of the most prominent models in the industry: Llama 2 70B, GPT-3.5, and Mistral 7B.

Comparative Analysis with Llama 2 70B and GPT-3.5

- Benchmarks Against Llama 2 70B: When juxtaposed with Llama 2 70B, Mixtral 8x7B showcases a distinct advantage, especially in terms of inference speed and efficiency. Despite Llama 2 70B's larger parameter count, Mixtral 8x7B's sparse architecture allows it to deliver faster responses without compromising on the depth or accuracy of its outputs.

- Performance Comparison with GPT-3.5: GPT-3.5 has been a benchmark for AI language models in terms of output quality and versatility. Mixtral 8x7B, in numerous benchmarks, has been shown to either match or exceed the capabilities of GPT-3.5. This is particularly notable in multilingual tasks and complex coding challenges.

Benchmarking Results and Highlights

The performance of Mixtral 8x7B has been tested across a variety of benchmarks, including MT-Bench for multi-turn instruction following, HumanEval for programming, and several NLP-specific challenges.

- Multilingual Capabilities: In Mistral AI's reported multilingual benchmarks, Mixtral 8x7B outperformed Llama 2 70B in French, German, Spanish, and Italian, a critical feature in today's global digital landscape.

- Code Generation: In coding-related tasks, Mixtral 8x7B has shown a remarkable ability to generate accurate, efficient code, often outperforming its competitors.

- Natural Language Processing: Across various NLP benchmarks, Mixtral 8x7B has consistently produced high-quality, contextually accurate responses, showcasing its advanced understanding and processing capabilities.

Mixtral 8x7B's Unique Selling Points

- Efficiency and Speed: The model's swift response time, coupled with its ability to handle extensive data, positions it as a leader in efficient AI processing.

- Versatility in Applications: The model’s performance in benchmarks suggests a wide range of applications, from language translation to sophisticated programming tasks.

- Cost-Performance Balance: Perhaps one of Mixtral 8x7B's most compelling attributes is its balance of cost and performance, offering state-of-the-art AI capabilities without exorbitant computational expenses.

Mixtral 8x7B by Mistral AI not only competes with but in many instances, surpasses the capabilities of some of the most advanced AI models available today. Its blend of efficiency, versatility, and cost-effectiveness sets it apart, making it an invaluable asset in various AI-driven applications. As we move forward, exploring its multilingual capabilities will shed more light on its global applicability.

Multilingual Capabilities of Mixtral 8x7B: Bridging Language Barriers

In today's interconnected world, the ability of an AI model to understand and interact in multiple languages is not just an added advantage but a necessity. Mixtral 8x7B, developed by Mistral AI, stands out for its proficiency in a range of languages, making it a powerful tool in global communication and AI applications.

Proficiency Across Languages

- Range of Languages: Mixtral 8x7B is adept in several major languages, including English, French, Italian, German, and Spanish. This multilingual capability expands its utility across different linguistic landscapes.

- Language-Specific Performance: Each language presents its unique nuances and challenges. Mixtral 8x7B, through its advanced architecture, manages to grasp and respond to these subtleties, showcasing an understanding that goes beyond mere word-to-word translation.

Effectiveness in Diverse Linguistic Contexts

- Contextual Understanding: Beyond basic language proficiency, Mixtral 8x7B demonstrates an impressive ability to understand the context in different languages. This means it can handle idiomatic expressions, cultural references, and language-specific humor with a high degree of accuracy.

- Code Switching and Blending: In a global setting, conversations often involve a mix of languages or code-switching. Mixtral 8x7B's ability to seamlessly handle such scenarios makes it an invaluable asset in international business, customer service, and social media interactions.

Implications of Multilingual Capabilities

- Broader Reach and Inclusion: The multilingual nature of Mixtral 8x7B opens doors for its application in diverse regions, catering to a broader audience and ensuring linguistic inclusivity.

- Enhanced Language Learning Tools: Mixtral 8x7B can be a potent tool in language learning and translation applications, providing users with accurate, context-aware translations and language learning support.

- Cultural Sensitivity and Localization: The model's ability to understand cultural nuances within language makes it ideal for localization efforts, ensuring that content is not just translated but culturally adapted.

The multilingual capabilities of Mixtral 8x7B are a testament to the strides being made in AI language models toward true global communication. By effectively handling multiple languages, Mixtral 8x7B sets itself up as an essential tool for businesses, educators, and developers looking to make their mark in a linguistically diverse world. As we continue to explore the depths of this model's capabilities, it’s clear that Mixtral 8x7B is not just a technological marvel but also a bridge between cultures and communities.

How to Install Mixtral 8x7B on AWS: Comprehensive Guide

Quick Developer Guide

Deploying advanced AI models like Mixtral 8x7B can be a complex process, but with the right tools and knowledge, it can be made significantly easier. Below, we delve into the essentials for deploying Mixtral 8x7B, highlighting its instructed model capabilities and detailing the process for deploying it on AWS using Meetrix’s AMI.

Prerequisites for Deployment

- Familiarity with AWS Services: A basic understanding of AWS services, particularly EC2 instances and CloudFormation, is crucial. This knowledge will aid in navigating the AWS ecosystem and leveraging its capabilities for deploying Mixtral 8x7B.

- Possess an Active AWS Account: Ensure you have an AWS account with the necessary permissions.

Instructed Models: Fine-Tuned for Precision

Mixtral 8x7B Instruct: This variant of Mixtral 8x7B has been optimized through supervised fine-tuning and direct preference optimization (DPO) for careful instruction following, reaching a score of 8.30 on MT-Bench. This makes it comparable to leading models like GPT-3.5.

Deployment on AWS: Simplified and Scalable

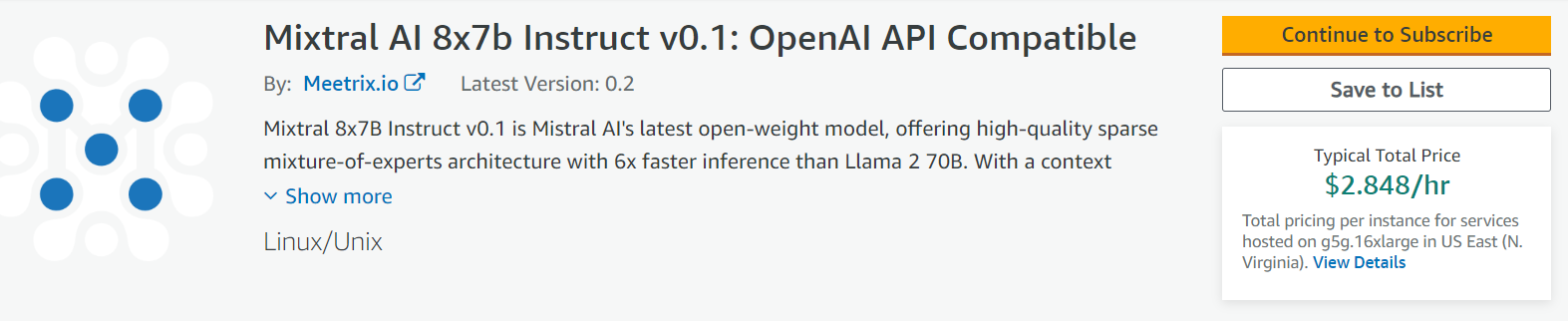

- User-Friendly AMI: Meetrix.io has developed a specialized Amazon Machine Image (AMI), making the deployment process on AWS straightforward, even for those with limited technical expertise.

- Scalability: The deployment solution is designed to cater to various scales, from small startups to large enterprises, ensuring that your Mixtral 8x7B deployment can grow with your organization.

- Cost-Efficiency: With a competitive pricing model, deploying Mixtral 8x7B via the AMI is both affordable and efficient, priced at $2.848/hr for a g5g.16xlarge instance.

- GDPR Security with Self-Hosting for Mixtral 8x7B

  - Data Control and Privacy: Hosting Mixtral 8x7B in your own AWS account gives you control over where your data is stored and processed, which supports GDPR requirements for data privacy and security.

  - Enhanced Data Protection: Utilize AWS's security features to protect sensitive information processed by Mixtral 8x7B, including encryption in transit and at rest.

  - Custom Security Policies: Implement custom security measures tailored to your organizational needs, so that your Mixtral 8x7B deployment meets your specific GDPR compliance requirements.

  - User Consent and Data Management: Self-hosting lets you build your own mechanisms for managing user consent and data requests, such as access, rectification, and deletion, in line with GDPR.

- Commercial Support for Mixtral 8x7B

  - Effortless Deployment: Access to a pre-configured AMI simplifies the deployment of Mixtral 8x7B on AWS, enabling a swift and efficient setup process.

  - Expert Guidance and Support: Receive dedicated support from Meetrix for Mixtral 8x7B installation, configuration, and troubleshooting, ensuring optimal performance.

  - Tailored Customizations: Benefit from customized solutions and integrations for Mixtral 8x7B, enhancing its utility and alignment with your business processes.

  - Scalability Advice: Leverage expert recommendations on scaling your Mixtral 8x7B deployment effectively, ensuring it grows with your business needs.

  - API Support for New Deployments: Enjoy comprehensive support for utilizing Mixtral 8x7B APIs for new deployments, facilitating seamless integration with existing systems and support for automation and advanced functionalities.
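To put the hourly rate quoted in the Cost-Efficiency point into perspective, here is a rough back-of-the-envelope estimate. It is only a sketch: it uses the $2.848/hr figure from this article, ignores storage, data transfer, and any other AWS charges, and the usage patterns are invented for illustration.

```python
HOURLY_RATE_USD = 2.848  # g5g.16xlarge figure quoted in this article

def estimate_cost(hours_per_day, days, rate=HOURLY_RATE_USD):
    """Instance-hours multiplied by the hourly rate, rounded to cents."""
    return round(hours_per_day * days * rate, 2)

# Two hypothetical usage patterns:
always_on = estimate_cost(24, 30)     # left running for a 30-day month
office_hours = estimate_cost(8, 22)   # stopped outside working hours

print(f"Always on:    ${always_on}")
print(f"Office hours: ${office_hours}")
```

Stopping the instance when it is not in use, as described under "Managing Mixtral", is the main lever for reducing this figure.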

Mixtral 8x7B Installation Guide on AWS: Step-by-Step

Welcome to the Meetrix Mixtral Developer Guide. This comprehensive guide aims to facilitate the seamless integration of the Mixtral 8x7B model into your AWS environment. Let's walk through the process step-by-step.

Launching the AMI

Step 1: Find and Select 'Mixtral' AMI

- Log In: Access your AWS Management Console.

- Navigate: Go to the AWS Marketplace and search for 'mixtral'.

  - Mixtral AI 8x7b Multi Model LLM - OpenAI API for ARM64

  - Mixtral AI 8x7b Multi Model LLM - OpenAI API for x86

Step 2: Initial Setup & Configuration

- Subscribe: Click the "Continue to Subscribe" button.

- Accept Terms: Agree to the terms and conditions and wait for the process to complete.

- Configure: Select "CloudFormation Template for Mixtral deployment" and your preferred AWS region. Then, click "Continue to Launch".

Step 3: Create CloudFormation Stack

- Prepare Template: Ensure "Template is ready" is selected.

- Specify Stack Options:

  - Enter a unique "Stack name".

  - Provide "Admin Email" for SSL generation.

  - Enter "DeploymentName" and a "DomainName".

  - Choose "InstanceType" (recommended: g5g.16xlarge).

  - Select your "keyName".

  - Set "SSHLocation" to the CIDR range allowed to reach the instance over SSH. "0.0.0.0/0" allows any address; restrict it to your own IP range where possible.

  - Keep "SubnetCidrBlock" and "VpcCidrBlock" as default.

- Configure Stack: Choose roll-back options and proceed.

Step 4: Review and Deploy

- Review: Ensure all details are correct.

- Acknowledge: Check the box acknowledging IAM resource creation.

- Submit: Click "Submit" to create your CloudFormation stack.
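The same stack could in principle be created from a script instead of the console. The sketch below assumes `boto3` is installed and credentials are configured. The parameter key spellings are guessed from the console labels in Step 3, and the template URL you pass in is whatever the Marketplace subscription gives you; none of these are confirmed by Meetrix. Depending on the template, `CAPABILITY_NAMED_IAM` may be required instead of `CAPABILITY_IAM`.

```python
def build_stack_parameters(values):
    """Convert a plain dict into the list-of-dicts shape CloudFormation expects."""
    return [{"ParameterKey": key, "ParameterValue": str(value)}
            for key, value in values.items()]

def create_stack(stack_name, template_url, values, region):
    """Create the stack with boto3 (imported lazily so the helper above has no dependencies)."""
    import boto3
    cfn = boto3.client("cloudformation", region_name=region)
    return cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=build_stack_parameters(values),
        # Scripted equivalent of ticking the IAM acknowledgement box in Step 4.
        Capabilities=["CAPABILITY_IAM"],
    )

# Hypothetical values; key names are assumptions based on the console labels.
params = build_stack_parameters({
    "AdminEmail": "admin@example.com",
    "DeploymentName": "mixtral-demo",
    "DomainName": "mixtral.example.com",
    "InstanceType": "g5g.16xlarge",
    "keyName": "my-ec2-keypair",
    "SSHLocation": "203.0.113.10/32",  # example CIDR from a documentation range
})
print(params[3])
```

Calling `create_stack("mixtral-demo", template_url, {...}, "us-east-1")` would then start the deployment; check the parameter names against the actual template before relying on this.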

Post-Deployment Steps

Update DNS

- Copy IP Address: From the "Outputs" tab, copy the "PublicIp".

- DNS Configuration: In AWS Route 53, navigate to "Hosted Zones" and update the DNS record with the copied IP address.
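If you prefer to script the DNS update, the following sketch builds an `UPSERT` change for an A record and submits it with `boto3`. The domain, IP address, and hosted zone ID are placeholders, and the helper names are hypothetical; only the `change_resource_record_sets` call itself is part of the Route 53 API.

```python
def build_a_record_change(domain_name, public_ip, ttl=300):
    """Build a Route 53 change batch that creates or updates one A record."""
    return {
        "Comment": "Point the Mixtral domain at the stack's PublicIp",
        "Changes": [{
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": domain_name,
                "Type": "A",
                "TTL": ttl,
                "ResourceRecords": [{"Value": public_ip}],
            },
        }],
    }

def apply_change(hosted_zone_id, change_batch):
    """Submit the change batch (requires boto3 and Route 53 permissions)."""
    import boto3
    route53 = boto3.client("route53")
    return route53.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch=change_batch,
    )

# Placeholder values: use your DomainName and the PublicIp from the Outputs tab.
batch = build_a_record_change("mixtral.example.com", "203.0.113.25")
print(batch["Changes"][0]["ResourceRecordSet"])
```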

Accessing Mixtral

Access the Mixtral application using the "DashboardUrl" or "DashboardUrlIp" provided in the "Outputs" tab.

Generate SSL Manually (If Needed)

If your domain is not hosted on Route 53, you'll need to set up SSL manually by following the steps provided in the guide.

Managing Mixtral

- Shut Down: Navigate to the Mixtral instance in EC2 to stop or restart as needed.

- Removal: Delete the created stack from the AWS Management Console if no longer needed.

Technical Support and API Documentation

- Support: For assistance, contact Meetrix Support at support@meetrix.io.

- API Documentation: Familiarize yourself with the API documentation for functions like retrieving completions, embeddings, chat completions, and listing models.

This guide provides a detailed, step-by-step approach to deploying Mixtral 8x7B on AWS. For additional information or assistance, Meetrix's support team is ready to help, ensuring you can leverage the full capabilities of Mixtral 8x7B in your AI applications.

Use Cases and Applications of Mixtral 8x7B

The Mixtral 8x7B model, with its advanced capabilities and multilingual support, opens up a plethora of possibilities across various sectors. Below are some practical applications and industries that stand to benefit significantly from implementing Mixtral 8x7B.

1. Content Creation and Digital Marketing

- Automated Content Generation: Mixtral 8x7B can create high-quality, contextually relevant content, aiding in blog writing, article creation, and social media content generation.

- SEO Optimization: Utilize the model to generate SEO-friendly, NLP-optimized content, enhancing web presence and digital marketing efforts.

2. Customer Service and Support

- Chatbots: Implement advanced chatbots that can handle complex customer queries in multiple languages, improving customer experience.

- Automated Email Responses: Streamline customer communication by auto-generating personalized email responses.

3. Education and Research

- Academic Research: Assist researchers in generating literature reviews, abstracts, and research proposals.

- Educational Tools: Develop educational content, interactive learning modules, and language learning applications.

4. Healthcare

- Medical Documentation: Automate the creation of patient reports and medical research summaries.

- Healthcare Chatbots: Provide instant, accurate responses to general health inquiries, reducing the workload on medical professionals.

5. Finance and Banking

- Market Analysis Reports: Generate insightful financial reports and market analyses.

- Automated Financial Advice: Provide personalized financial advice and investment strategies.

6. E-commerce and Retail

- Product Descriptions: Auto-generate unique, detailed product descriptions.

- Customer Review Analysis: Analyze customer reviews and feedback for business insights and product development.

7. Legal Industry

- Contract Review and Generation: Assist in drafting and reviewing legal documents.

- Legal Research: Quickly generate summaries of legal precedents and case law.

8. Entertainment and Media

- Scriptwriting and Storytelling: Aid in the creative process of scriptwriting and narrative development.

- News Article Generation: Rapidly create news articles and reports on current events.

9. Language Translation and Localization

- Translation Services: Provide high-quality translation across multiple languages, enhancing global communication.

- Localization of Content: Localize web content and marketing materials for different regions.

10. Software Development

- Code Generation and Review: Generate code snippets and assist in code review processes.

- Documentation Generation: Automate the creation of software documentation and user manuals.

Mixtral 8x7B’s multifaceted capabilities make it a valuable asset across diverse industries. Its ability to process and generate content in multiple languages further amplifies its applicability in the global market, making it an indispensable tool for businesses aiming to leverage AI for growth and efficiency.

Mixtral 8x7B API Documentation Overview

Mixtral 8x7B offers a comprehensive API, providing diverse endpoints to facilitate a wide range of functionalities. These endpoints cater to different needs such as text completion, data embedding, conversational interactions, and model information retrieval. Below is a detailed look at the primary endpoints available in the Mixtral 8x7B API.

1. Endpoint for Retrieving Completions: /v1/completions

- Function: Generates text completions based on the provided prompt.

- Method: POST

- Response Structure:

  - Contains the completed text based on the input prompt.

  - Includes metadata such as the request ID and token usage.
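A request to this endpoint might look like the sketch below, which uses only the Python standard library. The base URL and model name are placeholders, and the body fields (`model`, `prompt`, `max_tokens`) follow the OpenAI completions format on the assumption that the deployment mirrors it. Authentication, if your deployment requires it, is not shown.

```python
import json
import urllib.request

BASE_URL = "https://mixtral.example.com"  # placeholder for your DomainName

def build_completion_request(prompt, model="mixtral-8x7b-instruct", max_tokens=64):
    """Build (but do not send) a POST request for /v1/completions."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        BASE_URL + "/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_completion_request("Translate 'good morning' into French:")
print(req.get_method(), req.full_url)

# To actually send it against a running deployment:
# with urllib.request.urlopen(req) as resp:
#     body = json.load(resp)
```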

2. Endpoint for Retrieving Embeddings: /v1/embeddings

- Function: Generates embeddings for the provided input text, useful in tasks like semantic analysis.

- Method: POST

- Response Structure:

  - Returns a list of embeddings corresponding to the input text.

  - Each embedding represents a vector of numerical values capturing semantic features.
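Once you have embeddings, a common next step is comparing them. The sketch below pulls vectors out of a response and computes their cosine similarity. The response shape (`data[i].embedding`) is assumed to follow the OpenAI embeddings format, and the three-dimensional vectors are made up; real embeddings are much longer.

```python
import math

def extract_embeddings(response):
    """Return the embedding vectors from an OpenAI-style embeddings response."""
    return [item["embedding"] for item in response["data"]]

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up response for two input texts (assumed shape, toy values).
sample_response = {
    "data": [
        {"index": 0, "embedding": [1.0, 0.0, 1.0]},
        {"index": 1, "embedding": [1.0, 0.0, 0.0]},
    ]
}

vec_a, vec_b = extract_embeddings(sample_response)
similarity = cosine_similarity(vec_a, vec_b)
print(round(similarity, 4))
```

Values close to 1 indicate semantically similar inputs; values near 0 indicate unrelated ones.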

3. Endpoint for Chat Completions: /v1/chat/completions

- Function: Specifically designed for conversational AI, it generates responses based on chat messages.

- Method: POST

- Response Structure:

  - Provides conversational responses, including the assistant’s replies.

  - Useful for chatbot applications and conversational interfaces.
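For a multi-turn conversation, the earlier messages are sent along with the new one. The sketch below builds such a payload and reads the assistant's reply from a response. The field names (`messages`, `role`, `content`, `choices`) are assumed to follow the OpenAI chat format, and the model name and sample response are placeholders.

```python
def build_chat_payload(history, user_message, model="mixtral-8x7b-instruct"):
    """Append the new user message to the conversation so far."""
    messages = list(history) + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages}

def first_reply(response):
    """Return the assistant's text from an OpenAI-style chat response."""
    return response["choices"][0]["message"]["content"]

# Conversation so far (illustrative).
history = [
    {"role": "user", "content": "Hello!"},
    {"role": "assistant", "content": "Hello! How can I help you?"},
]
payload = build_chat_payload(history, "Summarise our chat in one sentence.")

# Made-up response in the assumed shape.
sample_response = {
    "choices": [
        {"index": 0,
         "message": {"role": "assistant", "content": "You greeted me."}}
    ]
}
reply = first_reply(sample_response)
print(len(payload["messages"]), reply)
```

The payload would be sent as the JSON body of a POST to /v1/chat/completions.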

4. Endpoint for Listing Models: /v1/models

- Function: Retrieves a list of available Mixtral 8x7B models.

- Method: GET

- Response Structure:

  - Lists available models with details like ID and ownership information.

  - Helpful for users to select the appropriate model for their specific requirements.
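Listing models is a convenient first check that a deployment is reachable. The sketch below builds the GET request and extracts model IDs from a response. The base URL, the response shape (`data[i].id`, `owned_by`), and the sample values are assumptions based on the OpenAI format.

```python
import urllib.request

BASE_URL = "https://mixtral.example.com"  # placeholder for your DomainName

def build_models_request():
    """Build (but do not send) a GET request for /v1/models."""
    return urllib.request.Request(BASE_URL + "/v1/models", method="GET")

def model_ids(response):
    """Return the IDs from an OpenAI-style model list response."""
    return [model["id"] for model in response.get("data", [])]

models_req = build_models_request()

# Made-up response in the assumed shape.
sample_models = {
    "object": "list",
    "data": [
        {"id": "mixtral-8x7b-instruct", "object": "model", "owned_by": "example-owner"},
    ],
}
ids = model_ids(sample_models)
print(models_req.get_method(), models_req.full_url, ids)
```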

Utilizing the API

To effectively use the Mixtral 8x7B API, developers should:

- Ensure proper formatting of request bodies as per the endpoint requirements.

- Understand the response structure to parse and utilize the data correctly in their applications.

- Be aware of any rate limits or quotas that might apply to API usage.
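If your deployment does enforce rate limits, a retry loop with exponential backoff is one common way to cope. The sketch below is generic: `RateLimited` is a hypothetical exception your own request function would raise on an HTTP 429 response, and the demo uses a fake sender rather than a real API call.

```python
import time

class RateLimited(Exception):
    """Raised by the caller-supplied function when the server answers HTTP 429."""

def call_with_backoff(send, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call send(); on RateLimited, wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            sleep(base_delay * 2 ** attempt)

# Demo with a fake sender that fails twice, then succeeds.
attempts = []

def flaky_send():
    attempts.append(1)
    if len(attempts) < 3:
        raise RateLimited()
    return {"ok": True}

delays = []  # record the waits instead of actually sleeping
result = call_with_backoff(flaky_send, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the helper easy to test; in production code the default `time.sleep` is used.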

The Mixtral 8x7B API is a versatile tool for developers, enabling the integration of advanced language processing capabilities into various applications. From generating human-like text to creating chatbots and analyzing text data, the API opens up a wide array of possibilities in AI-driven development.

Conclusion: The Impact and Future of Mixtral 8x7B

As we look back at the remarkable journey of Mixtral 8x7B by Mistral AI, it's clear that this model has significantly impacted the AI language model landscape. Built on a sparse mixture-of-experts architecture, Mixtral 8x7B represents a new era in AI, offering a blend of efficiency, flexibility, and power that was previously unattainable.

Summarizing Mixtral 8x7B’s Impact

- Technological Breakthrough: Mixtral 8x7B has set a new standard for AI models with its innovative architecture, enabling faster inference and handling of extensive token contexts.

- Multilingual Mastery: Its proficiency across multiple languages opens doors for diverse applications, breaking language barriers in AI interactions.

- Benchmark Leader: Matching or outperforming Llama 2 70B and GPT-3.5 on many benchmarks, Mixtral 8x7B has proven its capability as a top-tier language model.

Future Prospects and Ongoing Development

- Continued Innovation: Mistral AI is committed to advancing Mixtral 8x7B, with future versions likely to embrace more languages and even more refined AI capabilities.

- Expanding Applications: The model is set to see broader adoption across industries, from healthcare to finance, where its unique capabilities can drive transformation.

- Community Engagement: As an open-source model, Mixtral 8x7B will continue to benefit from community-driven enhancements and diverse use-case exploration.

Meetrix.io’s Pivotal Contribution

- Simplifying Deployment: Meetrix.io has played a crucial role in making Mixtral 8x7B accessible to a broader audience by simplifying its deployment on AWS.

- Bridging Gaps: By offering a user-friendly AMI, Meetrix.io has bridged the gap between advanced AI technology and businesses or developers with varying levels of technical expertise.

- Enabling Scalability: Their infrastructure solutions ensure that organizations of all sizes can leverage Mixtral 8x7B's power, ensuring scalability and cost-efficiency.

Final Thoughts

The journey of Mixtral 8x7B is a testament to the incredible strides being made in the AI field. With Meetrix.io’s support, this model is not just a technological marvel but also a beacon of accessibility and practicality. It stands as a symbol of what the future holds: AI that is not only powerful and advanced but also within reach for innovators and creators around the globe.

As we look forward, Mixtral 8x7B's journey is far from over. It is poised to continue shaping the future of AI, driven by continuous advancements and an ever-growing community of users and developers.