How to Install Llama 2 on AWS with a Pre-configured AMI in a Single Click

Notice: The Latest Version Available

We are excited to inform you that Llama 3, the latest version, is now available. For a more advanced and optimized experience, consider installing Llama 3 using our comprehensive guide. Click here to read the detailed instructions for installing Llama 3 on AWS with a single click.

LLAMA 3: Quick Video Guide

LLAMA 3 Developer Guide

Additional Help:

- AWS Marketplace Listing: LLaMa 3 Meta AI 8B | LLaMa 3 Meta AI 70B

- Resources: Llama 3's official guides

- Support: Reach out to Meetrix Support for further assistance: hello@meetrix.io

Blog: LLAMA 3

What is Llama 2?

LLaMA, which stands for Large Language Model Meta AI, is a project launched by Meta in February 2023. Created as part of Meta's broader commitment to open science, this large language model serves as a foundational tool designed to accelerate research in the field of artificial intelligence. The original LLaMA's largest variant has 65 billion parameters. Llama 2, its successor, was released in July 2023 in 7B, 13B, and 70B parameter sizes and aims to set new benchmarks in language understanding and generation.

In practical applications, this means that Llama 2 is not just about generating text but doing so with profound understanding and precision. From providing insightful answers and aiding research endeavors to crafting compelling narratives and even simulating human conversation, Llama 2 heralds a new era in AI's capabilities, blurring the lines between machine-generated and human expressions.

Its ability to comprehend and generate text has made it a cornerstone for numerous applications, from content creation to sophisticated data analysis. But while its capabilities are vast, deploying such a model can seem daunting, especially to those new to the field.

This is where the convenience of a pre-configured AWS setup shines. It removes the complexity of environment setup, letting users put Llama 2 to work with just one click. This combination of Llama 2 and AWS's streamlined settings makes cutting-edge AI more accessible and speeds up new applications. Join us as we delve into how AWS's efficiency amplifies Llama 2's potential.

Requirements for Seamless Llama 2 Deployment on AWS

Before delving into the ease of deploying Llama 2 on a pre-configured AWS setup, it's essential to be well-acquainted with a few prerequisites. These foundational steps ensure that you're adequately prepared to tap into the model's capabilities without any hitches. Let's unpack them:

1. Hardware Requirements for Running Llama 2:

- RAM: Given the intensive nature of Llama 2, it's recommended to have a substantial amount of RAM. The exact requirement may vary based on the specific model variant you opt for (like Llama 2-70b or Llama 2-13b).

- GPU: A powerful GPU is crucial. NVIDIA's A100 80GB, for instance, is a popular choice among AI enthusiasts for such tasks. Check the specific GPU and VRAM requirements based on the Llama variant you're interested in.

2. Basic AWS Knowledge and Account Setup:

- If you're new to AWS, consider familiarizing yourself with its basic operations. AWS offers comprehensive documentation and beginner-friendly tutorials.

- Ensure that you have an active AWS account. If not, you can set one up in a few straightforward steps on their official website. Remember to keep your credentials safe and handy, as you'll need them for the deployment process.

3. Suitable Server Types:

- EC2 (Elastic Compute Cloud): This web service provides resizable compute capacity in the cloud, making it easier to scale based on Llama 2's requirements.

- SageMaker: Especially useful if you're looking to integrate Llama 2 into broader machine learning workflows or applications.

- G4 Instances: These GPU instances are tailor-made for AI workloads and are highly recommended for running advanced models like Llama 2.

By ensuring you have the right hardware and a basic understanding of AWS, you set yourself up for a seamless and efficient Llama 2 deployment experience.

What is Meetrix's Pre-configured Llama 2 AMI Setup?

Meetrix's pre-configured AWS setup is a simplified deployment solution for users who want to deploy the Llama 2 model on Amazon Web Services (AWS). It is designed to incorporate best practices for security, cost optimization, scaling, maintenance, and integration, allowing users to deploy the model with ease and without having to manually configure each component.

Enjoy hassle-free deployment with Meetrix's pre-configured AWS setup for Llama 2. In just 1-3 minutes, get started with a single click. Retain full control over your data and only pay per hour of hosting. Our solution has undergone rigorous security scans to protect you from potential vulnerabilities. Plus, with the power of AWS, you're backed by robust security features. Looking for backup? It's straightforward. We've extensively tested this setup to ensure its reliability. Opt for our self-hosted solution and save up to 75% on costs, thanks to open-source integrations. Experience the future of SaaS alternatives for SMEs with Meetrix!

Why waste your time on the Llama 2 installation hassle? Try the Meetrix LLAMA AMI.

Why Pre-configured AWS Setup for Deploying Llama 2?

In today's technologically advanced landscape, businesses and individuals alike seek rapid, efficient, and effective solutions for their computational needs. Leveraging a pre-configured AWS setup when deploying models like Llama 2 offers numerous advantages. Let's delve into the core reasons.

Benefits of Pre-configured Llama 2 AWS Setup

- Rapid Deployment: Using a pre-configured setup eliminates the guesswork and significantly speeds up the deployment process. Instead of manually setting up every parameter, users can deploy Llama 2 swiftly, ensuring that projects move forward without unnecessary delays. With the One-Click Deployment feature, set up Llama 2 effortlessly and get started in an instant.

- Optimized Performance: Our fully pre-configured AMI is tailored to running Llama 2. It comes with settings and resources that have been tested and refined for this workload, so users get the best out of their Llama 2 installations without the guesswork.

- Cost-Efficiency: A pre-configured setup can also be more budget-friendly. By utilizing resources optimized for Llama 2, users can avoid overspending on unnecessary configurations or hardware. Moreover, AWS often provides cost-saving options, making the whole deployment process more economical. With our Low-cost SaaS SME solution and Unbeatable Pricing, experience a 75% reduction in costs without compromising on quality.

- Beginner-Friendly: For those new to AWS or Llama 2 deployment, a pre-configured setup can be a lifesaver. It offers a more straightforward approach, reducing the complexities often faced during manual setups. This means even those with minimal AWS knowledge can deploy Llama 2 confidently.

- Simplicity at its Best: Enjoy a Hassle-Free Experience. Concentrate on your core tasks and let us handle the intricate details of the setup.

- Reliability You Can Trust: Our solution isn't just effective; it's proven. Benefit from our Proven Reliability which has undergone rigorous testing.

- Pay-Per-Hour Pricing: Embrace pay-per-hour flexibility. With our pricing model, you pay only for the hours you use, not per seat.

- Top-Tier Security: With Amazon-Backed Security, you can have peace of mind knowing that industry leaders are ensuring your data's safety.

- Complete Control: With User-Centric Data Control, you hold the reins. Your data, your rules.

- Safety First: Our Automatic Safety Nets ensure that data loss is a concern of the past, thanks to our backup and restore functionalities.

- Support When You Need: Encounter an Issue? Our Customer Support is readily available to assist with any queries or challenges.

- All-in-One Productivity: With Integrated Tools, access a plethora of features designed to boost your efficiency, all in one consolidated place.

- Transparent Transactions: No hidden agendas. With our Transparent Pricing, you know exactly what you're investing in.

- Stay Ahead of the Curve: Our commitment to excellence means Regular Updates. Benefit from the latest product enhancements and cutting-edge features.

- GDPR Security with Self-Hosting for Llama 2

Complete Data Sovereignty: Utilizing Meetrix's AMI to deploy Llama 2 on AWS guarantees that organizations retain full control over their data, a crucial requirement for GDPR compliance. Self-hosting enhances data sovereignty, allowing for rigorous management and protection of user information.

Data Protection Enhanced: By self-hosting Llama 2 via Meetrix's AMI, businesses ensure that their conversational AI data (user interactions, training data, and generated content) is processed and stored in compliance with GDPR's strict data protection standards. Encryption and secure data handling practices are designed to safeguard sensitive data against breaches.

- Commercial Support for Llama 2

Tailored Deployment Strategies: Meetrix's commercial support extends to offering bespoke deployment strategies for Llama 2, ensuring that the AI model's integration aligns with business objectives while adhering to GDPR requirements and enhancing operational efficiency.

API Integration and Deployment Support: Businesses benefit from API support for new deployments of Llama 2, facilitating seamless integration into existing systems. This support encompasses technical guidance on leveraging Llama 2's API for custom AI solutions, streamlining deployment, and optimizing performance.

Data Compliance Consultation: Meetrix offers specialized consultation services as part of its commercial support, guiding businesses through the intricacies of GDPR compliance when deploying and using Llama 2. This includes advice on data processing, storage, and user consent management to ensure that all interactions are fully compliant.

Restoration and Recovery Services: In addition to deployment support, Meetrix provides expert services for data restoration and recovery for Llama 2 deployments, ensuring that businesses can quickly rebound from data loss incidents while maintaining compliance with data protection regulations.

While manual configurations offer a level of customization, the benefits of a pre-configured AWS setup—ranging from speed to cost-effectiveness—make it a compelling choice for many when deploying Llama 2.

How to Deploy Llama 2 in Single-click at AWS

Step-by-Step Guide: Installing Llama 2

Explanation: Meetrix.io offers a seamless single-click deployment solution for Llama 2, the large language model developed by Meta. The Amazon Machine Images (AMIs) are fully optimized for developers, ensuring efficient integration through an OpenAI-style generative text API. With this guide, you'll be able to deploy Llama 2 on your AWS environment in no time.

Steps for a smooth one-click setup

Prerequisites:

- Basic knowledge of AWS services.

- An active AWS account.

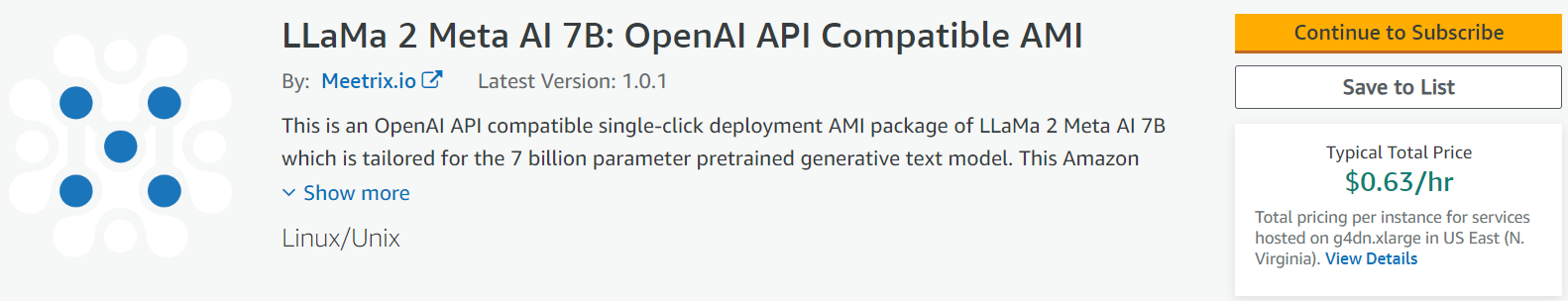

Launching the AMI:

- Log in to the AWS Marketplace Console and select the desired Llama product (e.g., LLaMa 2 Meta AI 7B, LLaMa 2 Meta AI 13B, LLaMa 2 Meta AI 70B).

- Click "Continue to Subscribe" and accept the terms.

- Configure the software by selecting the desired region and proceed to launch CloudFormation.

- Create a CloudFormation Stack, providing necessary details such as Stack name, Admin Email, keyName, and others.

- Ensure the proper configurations and review the details.

- Wait for the stack creation, which typically takes 5-10 minutes.

- Update DNS: Copy the public IP and update DNS in AWS Route 53.

Access Llama: You can now access Llama using the provided Dashboard URL.

- For SSL, if the automatic setup fails, there's a process to manually generate SSL. This involves copying the IP address, logging into the server, and executing specific commands.

For more details, see the Llama Developer Guide.

How about skipping the Llama 2 installation hassle? Try Meetrix LLAMA AMI instead!

Post-installation Steps for Llama 2

Once you've successfully installed Llama 2 on your AWS environment using the single-click AWS deployment or manual installation, it's imperative to ensure that everything is functioning as expected. Here's a guide on the post-installation steps you should take to verify the installation and ensure smooth operations.

Verifying the Llama 2 installation

1. Check the AWS CloudFormation Stacks page

- Access the AWS Management Console.

- Navigate to CloudFormation and view the "Stacks" section.

- Ensure that the Llama stack has been successfully created, and there are no errors listed.

2. Access Llama Dashboard

- Using the "DashboardUrl" provided in the "Outputs" tab, open the Llama application dashboard in your web browser.

- The dashboard should load without any errors, confirming the successful installation of Llama 2.
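The console checks above can also be scripted. The sketch below assumes the boto3 SDK is installed and AWS credentials are configured; the stack name passed to `check_stack` is whatever you entered during stack creation. It reads the stack status and the "DashboardUrl" output from a CloudFormation `describe_stacks` response. The helper names (`summarize_stack`, `is_healthy`, `check_stack`) are illustrative and not part of any Meetrix tooling.

```python
from typing import Any, Dict, Optional


def summarize_stack(response: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Pull the status and DashboardUrl out of a describe_stacks response."""
    stack = response["Stacks"][0]
    outputs = {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}
    return {
        "status": stack["StackStatus"],
        "dashboard_url": outputs.get("DashboardUrl"),
    }


def is_healthy(summary: Dict[str, Optional[str]]) -> bool:
    """Treat the stack as ready once creation finished and a URL is exposed."""
    finished = summary["status"] in ("CREATE_COMPLETE", "UPDATE_COMPLETE")
    return finished and summary["dashboard_url"] is not None


def check_stack(stack_name: str) -> Dict[str, Optional[str]]:
    """Query CloudFormation for the given stack (requires boto3 and credentials)."""
    import boto3  # imported lazily so the helpers above work without the SDK

    client = boto3.client("cloudformation")
    return summarize_stack(client.describe_stacks(StackName=stack_name))
```

Calling `is_healthy(check_stack("my-llama-stack"))` with your own stack name returns `True` only when the stack finished without rollback and exposes a dashboard URL.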

Running tests to ensure the model is operational

- Use API Documentation for Testing

- Based on the provided API Documentation, you can run test commands against the endpoints, such as "/v1/engines/copilot-codex/completions" or "/v1/completions".

- Use sample request bodies to test the model's response.

For example:

{
  "prompt": "\n\n### Instructions:\nWhat is the capital of France?\n\n### Response:\n",
  "stop": ["\n", "###"]
}

Send the request and verify that the response body returns the expected results, like:

{
  "text": "The capital of France is Paris.",
  "index": 0
}

Integrating with other tools or platforms

Depending on the use case, you might want to integrate Llama 2 with other platforms or tools in your ecosystem.

Integration with Web Applications

- Using the provided API documentation, build integration components in your web application that can send prompts to Llama 2 and receive responses.

- Be sure to handle rate limits, error messages, and other API concerns appropriately.
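As a minimal sketch of such an integration component, the code below posts a prompt to the "/v1/completions" endpoint mentioned earlier and retries with exponential backoff when the server answers with HTTP 429 or a 5xx error. The base URL is a placeholder for your own Dashboard URL, the retry policy is an assumption, and `extract_text` accepts both the bare response shape shown in this article and the `choices` list used by OpenAI-style servers, since the exact schema depends on your deployment.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Any, Callable, Dict


def build_request(base_url: str, prompt: str) -> urllib.request.Request:
    """Create a POST request for the completions endpoint."""
    payload = {"prompt": prompt, "stop": ["\n", "###"]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(body: Dict[str, Any]) -> str:
    """Read the generated text from either supported response shape."""
    if "choices" in body:  # OpenAI-style wrapper (assumption)
        return body["choices"][0]["text"]
    return body["text"]  # bare object, as in the sample response


def _send(request: urllib.request.Request) -> Dict[str, Any]:
    """Perform the HTTP call and decode the JSON body."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def complete(
    base_url: str,
    prompt: str,
    send: Callable[[urllib.request.Request], Dict[str, Any]] = _send,
    retries: int = 3,
    backoff: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Send a prompt, retrying on rate limits (429) and server errors (5xx)."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(retries):
        try:
            return extract_text(send(build_request(base_url, prompt)))
        except urllib.error.HTTPError as err:
            retryable = err.code == 429 or err.code >= 500
            if not retryable or attempt == retries - 1:
                raise
            sleep(backoff * 2 ** attempt)  # wait 1s, 2s, 4s, ...
    raise RuntimeError("unreachable")
```

The `send` and `sleep` parameters exist so the retry logic can be exercised without a live server. In a web application you would normally call `complete(base_url, prompt)` with the defaults.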

Note: For any further technical assistance or issues, you can contact Meetrix Support at support@meetrix.io.

Troubleshooting Common Issues

1. Llama 2 Dashboard Not Loading

- Problem: After successful installation, the Llama 2 dashboard does not load or returns an error.

Solution: Check the AWS CloudFormation logs for any errors during setup. Ensure that all required resources, like EC2 instances, RDS, etc., are in a running state.

2. API Endpoint Not Responding

- Problem: When sending a request to the API endpoint, there's no response or an error message is returned.

Solution: Ensure that your API request format matches the documentation. Check if Llama 2 is running and if there's any issue with the API gateway.

3. Resources for Seeking Additional Help:

Comparing Installation Methods: Single-click vs Manual Deployment

Both Single-click and Manual Deployment methods come with their unique advantages and challenges. Here's a comparative analysis of these methods.

Single-click Deployment

Pros

- Simplicity: With just a click, you can deploy Llama 2, making it very user-friendly.

- Time-saving: No need to spend hours configuring settings manually.

- Reduced Errors: Automated processes minimize the chances of human errors during configuration.

- Consistent Setup: Each deployment is standardized, ensuring that the setup is optimal and consistent every time.

Cons

- Limited Customization: The parameters are pre-set, which might not be suitable for users who need a specific configuration.

- Opaque Process: Since everything is automated, users might not fully understand all the backend processes or how to troubleshoot specific issues.

Note: Meetrix's AMI package addresses all of the cons listed above.

Manual Deployment

Pros

- Flexibility: Gives you full control over the entire deployment process.

- Custom Configuration: Ideal for advanced users who have specific requirements that cannot be met by the standard setup.

- Knowledge Gain: Going through the manual process can provide deeper insights into how Llama 2 interacts with underlying systems and their dependencies.

Cons

- Time-consuming: Setting up everything manually can take a significant amount of time.

- Prone to Errors: Manual configuration increases the chance of human errors which can lead to unstable setups.

- Complexity: Requires a deeper understanding of AWS and other associated technologies. Not beginner-friendly.

Why the pre-configured AWS setup might be the best choice for most users?

The pre-configured AWS setup is designed to cater to a broad spectrum of users, especially those who might not be well-versed in the nitty-gritty of AWS or Llama 2's intricate configurations.

While manual deployment offers a greater depth of control, the pre-configured AWS setup offers a balance of simplicity, speed, and reliability, making it a favorable choice for most users.

Llama 2 Security: AWS Best Practices

When deploying Llama 2 or any other application on AWS, security is of paramount importance. Following these guidelines will help in ensuring that your application and data remain safe.

Setting up IAM roles for restricted access

- Purpose: By setting up IAM (Identity and Access Management) roles, you can grant permissions that determine who is allowed to interact with resources in your AWS environment.

- Implementation

- Create separate IAM roles for different tasks. For instance, a role for Llama 2 management and another for data access.

- Assign only the necessary permissions to each role, following the principle of least privilege.

- Rotate IAM credentials regularly.
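To illustrate least privilege, here is a small sketch that builds an IAM policy document granting read-only access to a single S3 prefix, for example one holding model artifacts. The bucket name, prefix, and statement ID are hypothetical; adapt the actions and resources to what your own role actually needs.

```python
import json
from typing import Any, Dict


def read_only_prefix_policy(bucket: str, prefix: str) -> Dict[str, Any]:
    """Build a policy that only allows reading objects under one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadModelArtifacts",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical names; paste the printed JSON into the IAM policy editor.
    print(json.dumps(read_only_prefix_policy("my-llama-bucket", "models"), indent=2))
```

Keeping data-access permissions in a policy like this, separate from the role used to manage the Llama 2 instance, keeps each role's blast radius small.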

Enabling multi-factor authentication (MFA) for your AWS account

- Purpose: MFA adds an additional layer of security to your AWS account by requiring two or more forms of identification.

- Implementation

- Go to the AWS Management Console and navigate to the IAM dashboard.

- Select the user you wish to enable MFA for.

- Under the 'Security Credentials' tab, choose ‘Manage MFA’ and follow the on-screen instructions.

Server Side Security

Using security groups and firewalls effectively

- Purpose: Security groups in AWS act as a virtual firewall to regulate inbound and outbound traffic to instances, ensuring only approved traffic reaches your application.

- Implementation

- When setting up a security group for Llama 2, allow only the necessary IP ranges and ports.

- Regularly review and update security group rules to ensure they align with changing requirements.

- Limit the access to the admin console or SSH to only trusted IP addresses.
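The rules above can be expressed in code. This sketch builds an ingress rule in the `IpPermissions` format accepted by the EC2 API and refuses to open a port to the whole internet unless explicitly asked. The CIDR ranges and descriptions are placeholders, and the function name is illustrative.

```python
import ipaddress
from typing import Any, Dict


def ingress_rule(
    port: int, cidr: str, description: str, allow_any: bool = False
) -> Dict[str, Any]:
    """Build one TCP ingress rule restricted to a single IPv4 CIDR range."""
    network = ipaddress.IPv4Network(cidr)  # raises ValueError on a malformed CIDR
    if network.prefixlen == 0 and not allow_any:
        raise ValueError(f"refusing to open port {port} to 0.0.0.0/0")
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": str(network), "Description": description}],
    }


if __name__ == "__main__":
    # SSH only from one trusted address; HTTPS open to everyone on purpose.
    rules = [
        ingress_rule(22, "203.0.113.10/32", "admin SSH"),
        ingress_rule(443, "0.0.0.0/0", "public HTTPS", allow_any=True),
    ]
    print(rules)
    # With boto3 installed and credentials configured, the rules could be applied via:
    #   boto3.client("ec2").authorize_security_group_ingress(
    #       GroupId="<your security group id>", IpPermissions=rules)
```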

SSL/TLS setup for encrypted connections to Llama 2

- Purpose: SSL/TLS encryption ensures that data transmitted between the client and Llama 2 server is secure and protected from eavesdropping.

- Implementation

- Purchase or obtain an SSL certificate from a trusted Certificate Authority (CA). There are free options available like Let’s Encrypt.

- Install and configure the SSL certificate on your server.

- Redirect all HTTP requests to HTTPS, ensuring data is always encrypted in transit.

By adopting these security measures, you can significantly enhance the security posture of your Llama 2 deployment on AWS. Always remember that security is an ongoing process, and regular audits and reviews are essential to ensure the resilience of your setup.

Optimizing Costs on AWS

1. Spot Instances

- Use spare EC2 computing capacity at reduced prices.

- Suitable for flexible, interruptible tasks.

- Use Spot Fleet for automated management and consider on-demand instances for critical tasks.

2. Cost Monitoring Tools

- Utilize AWS Cost Explorer to track spending patterns.

- Set up AWS budgets for spending alerts.

- Act on AWS recommendations to identify savings.

3. Selecting the Right AWS Region

- Choose a region close to your audience for reduced latency.

- Compare service prices across regions.

- Keep in mind data sovereignty and regulatory constraints.

By strategically utilizing AWS offerings, you can ensure efficient performance for Llama 2 while minimizing costs.
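Because hosting is billed per hour, a quick calculation shows how much running time drives the bill. The hourly rate below is a made-up placeholder, not an AWS or Meetrix price; substitute the figure from the AWS pricing page or Cost Explorer for your instance type and region.

```python
def monthly_cost(hourly_rate_usd: float, hours_per_day: float, days: int = 30) -> float:
    """Estimate the monthly bill for an instance billed per hour."""
    return hourly_rate_usd * hours_per_day * days


def savings_fraction(baseline_usd: float, actual_usd: float) -> float:
    """Return the share of the baseline cost that was saved (0.0 to 1.0)."""
    return 1.0 - actual_usd / baseline_usd


if __name__ == "__main__":
    rate = 1.00  # hypothetical USD per hour, for illustration only
    always_on = monthly_cost(rate, hours_per_day=24)
    work_hours = monthly_cost(rate, hours_per_day=8)
    print(f"Always on:     ${always_on:.2f} per month")
    print(f"8 hours a day: ${work_hours:.2f} per month")
    print(f"Saved: {savings_fraction(always_on, work_hours):.0%}")
```

The same function can compare on-demand and Spot pricing by passing the two hourly rates with identical running hours.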

Maintenance and Monitoring

1. Setting up AWS CloudWatch: Continuously monitor Llama 2's operational health and performance.

- Create custom CloudWatch Dashboards for Llama 2 metrics.

- Set up CloudWatch Alarms for anomaly detection or to react to predefined thresholds.

- Log important metrics for historical analysis.

2. Regular Backups: Protect data integrity and ensure quick recovery in case of failures.

- Use AWS Backup for automating backup tasks across AWS services.

- Determine backup frequency (e.g., daily, weekly) based on data change rate.

- Store backups in Amazon S3, ensuring versioning and lifecycle policies are in place.

3. Updates and Patches: Maintain the latest security and performance enhancements for Llama 2 and its underlying infrastructure.

- Regularly check Llama 2's official channels or GitHub repository for updates.

- Use AWS Systems Manager Patch Manager to automate patching tasks for EC2 instances.

- Monitor AWS security bulletins for relevant updates.
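As one way to set up the CloudWatch alarms mentioned above, the following sketch builds the arguments for a CPU utilization alarm on the EC2 instance hosting Llama 2. It assumes boto3 and configured credentials for the final call. The alarm name, the 80% threshold, and the optional SNS topic are illustrative choices rather than Meetrix defaults.

```python
from typing import Any, Dict, Optional


def cpu_alarm_params(
    instance_id: str,
    threshold_percent: float = 80.0,
    sns_topic_arn: Optional[str] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for CloudWatch put_metric_alarm."""
    params: Dict[str, Any] = {
        "AlarmName": f"llama2-high-cpu-{instance_id}",  # hypothetical naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,  # seconds per data point
        "EvaluationPeriods": 2,  # alarm after two consecutive breaches
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if sns_topic_arn:
        params["AlarmActions"] = [sns_topic_arn]  # notify this topic on alarm
    return params


def create_cpu_alarm(instance_id: str, sns_topic_arn: Optional[str] = None) -> None:
    """Create the alarm in your account (requires boto3 and credentials)."""
    import boto3  # imported lazily so cpu_alarm_params works without the SDK

    client = boto3.client("cloudwatch")
    client.put_metric_alarm(**cpu_alarm_params(instance_id, sns_topic_arn=sns_topic_arn))
```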

Note: At Meetrix, we understand the complexities of managing cloud deployments. That's why our Llama 2 AMI on AWS has been meticulously crafted to integrate a multitude of essential features for you. From robust security measures, including the best AWS practices, to cost-optimization tools, our AMI has got you covered. We've also streamlined scalability solutions, incorporating load balancers right out of the box. When it comes to maintenance and monitoring, users can have peace of mind knowing that the Meetrix Llama 2 AMI comes pre-configured with AWS CloudWatch, automated backup utilities, and timely updates and patches. In essence, our AMI offers a holistic, hassle-free experience, ensuring that deploying and managing Llama 2 on AWS is both efficient and effective.

Integrating Llama 2 with Other AWS Services

Harnessing the full power of AWS means taking advantage of its vast suite of services, and when it comes to integrating Llama 2, there are a few key services that can significantly enhance its functionality:

- Amazon S3: A crucial storage solution, S3 can be used to store model weights, training data, or any auxiliary files related to Llama 2. By integrating with S3, users can seamlessly upload or download data directly to or from their Llama 2 instance, ensuring that all relevant data remains securely stored in a central, accessible location.

- AWS Lambda: This serverless compute service can be a game-changer for Llama 2 integrations. By leveraging Lambda, users can set up event-driven triggers. For instance, whenever there's a model update or a specific input arrives, a Lambda function can be triggered to process this data or perform other auxiliary tasks without the need for a dedicated server.

- Amazon API Gateway: For those looking to make Llama 2 accessible as a service, the API Gateway is an indispensable tool. It enables users to create, publish, and secure APIs seamlessly. Whether you're looking to build a front end for your Llama 2 model or want to integrate it into existing workflows, the API Gateway provides a robust and secure method to expose Llama 2 functionalities to other applications or services.

Incorporating these AWS services with Llama 2 can lead to more dynamic, scalable, and efficient applications, allowing users to build complex workflows with ease.
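As a sketch of how Lambda and API Gateway could sit in front of Llama 2, the handler below accepts an API Gateway proxy event carrying a JSON body with a `question` field, wraps it in the prompt format used in the testing example earlier, and forwards it to the completions endpoint. The `LLAMA_URL` environment variable, the `question` field, and the `send` parameter (used to swap in a stub for testing) are assumptions made for illustration.

```python
import json
import os
import urllib.request
from typing import Any, Callable, Dict

# Assumption: the endpoint URL is supplied through an environment variable.
LLAMA_URL = os.environ.get("LLAMA_URL", "https://llama.example.com/v1/completions")


def call_llama(payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST the payload to the Llama 2 endpoint and decode the JSON reply."""
    request = urllib.request.Request(
        LLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def _reply(status: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Format a response in the shape API Gateway proxy integrations expect."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(
    event: Dict[str, Any],
    context: Any,
    send: Callable[[Dict[str, Any]], Dict[str, Any]] = call_llama,
) -> Dict[str, Any]:
    """Validate the incoming request and forward the question to Llama 2."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _reply(400, {"error": "request body must be valid JSON"})
    question = body.get("question") if isinstance(body, dict) else None
    if not question:
        return _reply(400, {"error": "missing 'question' field"})
    payload = {
        "prompt": f"\n\n### Instructions:\n{question}\n\n### Response:\n",
        "stop": ["\n", "###"],
    }
    return _reply(200, send(payload))
```

Keeping the network call behind `send` means the validation logic can be tested locally without deploying the function or reaching the model.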

Deploying and using Llama 2 has never been easier, especially with the pre-configured AWS setup. While manual deployment offers more control, single-click deployment offers simplicity and speed. Whatever method you choose, we encourage you to dive deep into Llama 2, explore its vast capabilities, and elevate your projects to new heights.

Additional Resources - References

- Official Llama 2 documentation

- Llama 2 GitHub repository

- Deploying Llama 2 on GCP

- Deploying Llama 2 on Azure

- Deploying Llama 2 locally

- Llama 2 community support